
If you’ve ever stared at a JavaScript file and wondered where to even start with testing it, you’re not alone. Most developers agree unit testing matters, but in JavaScript it often feels like a fragmented mess. Between setup inconsistencies, brittle mocks, and CI jobs that mysteriously fail on Tuesdays, it’s no wonder testing falls to the side when deadlines loom. The intent is there; what’s missing is a repeatable, maintainable way to begin.

The best test suites aren’t chasing perfect coverage; they aim for complete confidence in core logic. Many projects never get that far. A 2024 study of 9,129 open-source deep learning projects on GitHub found that 68 percent had no unit tests at all. The projects that did have tests were significantly more likely to accept contributions and maintain stability.

Whether your team is running on Jest or Mocha or just figuring out what testing means in a JavaScript project, this guide provides a practical, step-by-step approach.

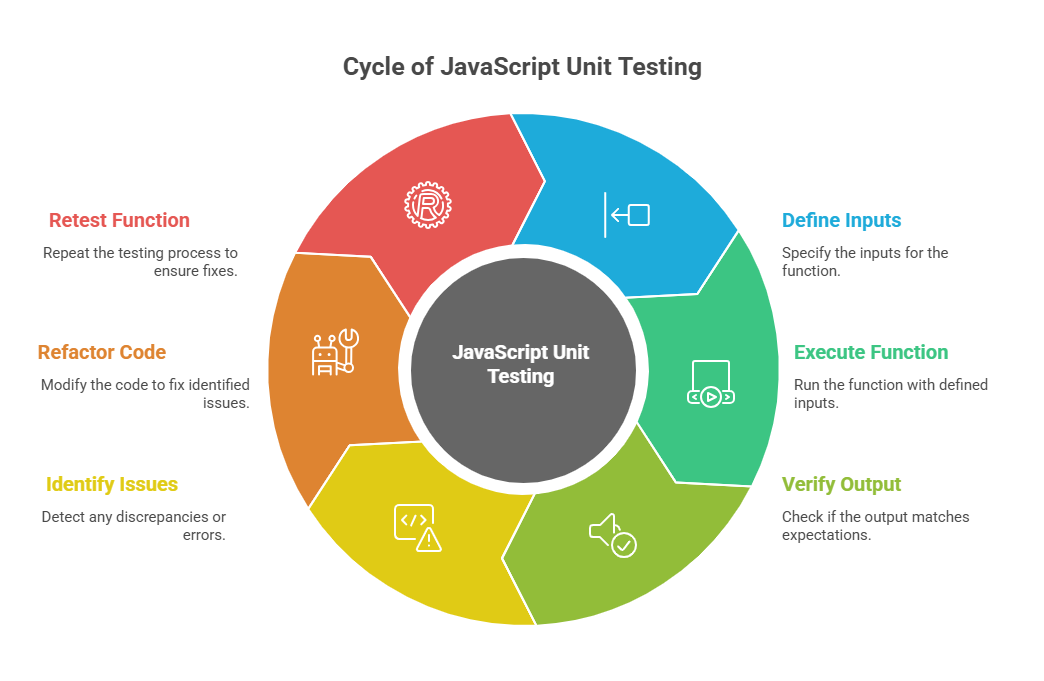

The JavaScript Unit Testing cycle: A visual breakdown of defining inputs, executing functions, verifying outputs, identifying issues, refactoring, and retesting to ensure code quality and reliability

Unit testing in JavaScript verifies that a specific function or block of logic behaves as expected for defined inputs. It runs that code in isolation: no databases, no network requests, no framework lifecycle hooks. Just the logic, nothing else.

This kind of testing is especially common in frontend and full-stack projects where utility functions, validation logic, or state transformations need to behave consistently across components. For example, you might test a function that filters product results, calculates totals in a shopping cart, or normalizes API responses before they hit the UI.

Unit tests differ from integration and end-to-end tests by staying close to the code. They’re also distinct from component tests, which verify how pieces of the UI behave together under different states. Unit tests don’t check how different parts of the system interact; they check that the logic itself holds up. This makes them fast to run and precise when they fail. In API-heavy systems, they also support broader efforts to address recurring issues flagged by OWASP, such as broken access control and improper input handling.

In practice, unit testing helps teams ship changes without rechecking assumptions every time. You can rename a function, move it into a new module, or optimize an algorithm without wondering if you’ve broken something buried two components deep. The test answers that for you.

Most developers don’t avoid unit testing because they don’t believe in it. They avoid it because it gets messy fast. The setup feels vague, the test structure isn’t obvious, and writing that first test often takes longer than expected. Add a tight sprint or shifting priorities, and testing falls to the side.

In JavaScript projects, things can get especially fragmented. Some tests run in Node, some in the browser. You mock one dependency and suddenly five others break. This fragmentation also opens the door to broader risks, like attackers exploiting familiar themes to lure developers and users alike, as seen in a recent DMV-themed phishing campaign targeting U.S. citizens.

Tech leads feel this too. Enforcing consistent testing practices across multiple teams is hard, especially when half the test files are out of date or written in completely different styles. Without a clear structure, testing turns into overhead instead of leverage. And when that happens, it silently drags on broader developer productivity metrics like delivery speed, bug rework, and cycle time.

Tools like Early Catch work best when these pain points are addressed early. Before automation adds value, you need to make testing something developers can actually stick to. That starts with making it less painful to begin.

Most teams don’t fail at testing because they’re undisciplined. They fail because the cost of writing and maintaining tests creeps up silently. Tests get flaky. Test suites get slow. Expectations get out of sync with business logic. And when confidence drops, the testing culture breaks down.

Step 1: Choose Your Framework and Stick With It

Start with Jest. It’s fast, well-supported, and widely adopted in both frontend and backend JavaScript environments. It comes with built-in mocking, watch mode, snapshot testing, and a smooth DX out of the box.

When to consider alternatives:

- Use Vitest if you're in a Vite-based stack. It offers faster startup and tighter integration with native ESM.

- Use Mocha + Chai only if you’re working in a legacy codebase that already uses them. Avoid introducing them into greenfield projects—they require more configuration and ecosystem stitching.

Standardizing on a single test framework reduces friction for contributors, makes shared tooling easier to maintain, and improves onboarding for new engineers. Inconsistent tooling is a productivity tax that compounds over time.

Step 2: Set Up Tests to Reflect the Codebase, Not Compete With It

Don’t isolate tests in a /tests directory that becomes a dumping ground. Co-locate them with the code they verify. This keeps context tight and makes it easier to see when tests go stale.

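Recommended structure: keep each test file beside the module it covers. For example, a hypothetical src/cart/calculateTotal.js would sit next to src/cart/calculateTotal.test.js.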

Use .test.js or .spec.js extensions. Configure your runner to only pick up those files. Avoid deep folder hierarchies—if it takes five minutes to find the test, it won’t get updated when the logic changes.
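With Jest, one way to restrict test discovery is the testMatch option in jest.config.js. This is a minimal sketch that assumes a CommonJS project:

```javascript
// jest.config.js
/** @type {import('jest').Config} */
module.exports = {
  // Only pick up *.test.js and *.spec.js files. Jest's default patterns
  // also match any JS/TS file inside __tests__ folders.
  testMatch: ['**/*.test.js', '**/*.spec.js'],
};
```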

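How to run: with Jest installed as a dev dependency, npx jest runs the whole suite once, and npx jest --watch reruns the tests related to files you change.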

Test discoverability directly affects test reliability. If tests are hard to find, they fall out of sync. If they're hard to run, they get skipped. Structure and naming conventions are the first testing standards worth enforcing across teams.

Step 3: Write Tests That Reflect Behavior, Not Implementation

Unit tests should act like contracts. Given X input, the system should return Y. That’s it. Don’t test internal calls. Don’t test logic branches just to bump coverage. Focus on verifying observable outcomes.

Example:

Tests tied too tightly to implementation details break as soon as you refactor—even when behavior hasn’t changed. That leads to brittle suites and teams avoiding cleanups to avoid “breaking the tests.”

Step 4: Mock Carefully, Not Automatically

When your logic reaches out to billing systems, analytics pipelines, or other internal services, you need to test the decision-making without letting those side effects run.

Take this example: toggling a feature flag for an enterprise customer and logging the event.

To test this, you don't want to write to the real flag store or log system. But you do want to ensure your function triggered both with the right arguments:

Step 5: Treat Test Maintenance Like Feature Work

Most teams write tests with good intent. But over time, broken tests get skipped, test files get outdated, and coverage becomes performative rather than useful. There are practical ways to improve unit test coverage without bloating the suite or chasing superficial metrics.

What helps:

- Use --watch during development. Fail fast, fix fast.

- When a test fails, investigate—don’t just patch.

- Remove tests that no longer reflect product requirements.

- Refactor test names and structure just like production code.

A test suite isn’t a checklist. It’s a system of trust. The best test suites don’t aim for 100% coverage. They aim for 100% confidence in the parts of the system that actually move. The value of a test isn’t in its existence. It’s in how much signal it gives you when something changes.

The hardest part of testing isn’t writing the test; it’s keeping it relevant over time. The practices below aren’t rules for purity. They’re the patterns that tend to hold up when code changes, teams grow, and the test suite becomes part of the infrastructure.

1. Name tests like someone else will read them cold

The test name is the debugger when the failure shows up in CI. A good test title should explain what broke and under what condition.

Good:

Bad:

If your test title doesn’t tell someone what failed without reading the test body, rewrite it.

2. One behavior per test

Tests that assert multiple things tend to fail ambiguously. You get a red failure, but not the story behind it. Split tests so that each one answers exactly one question.

Bad:

Better:

3. Focus on outcomes, not internals

You’re testing behavior, not implementation. Avoid overusing mocks to assert whether a function was called. Instead, assert the result of calling your code.

Avoid:

Prefer:

4. Use parameterized tests for input variations

If you're testing the same logic against multiple roles, states, or inputs, don’t repeat the test. Use it.each or its equivalent to keep the suite lean and expressive.

These principles won’t eliminate maintenance, but they will give your team a test suite that holds its shape as your system evolves. That’s what long-term reliability actually looks like. It also supports broader engineering goals, like maintaining compliance with internal data handling practices or aligning with an established access control model.

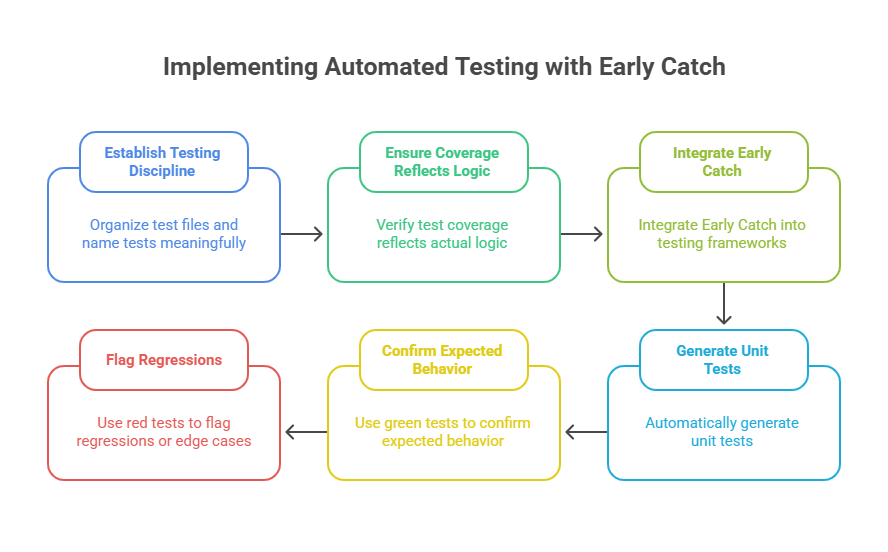

Automated unit testing workflow powered by Early Catch: from establishing disciplined test structures to generating red and green tests for regressions and expected behaviors, built for Jest, Vitest, and Mocha ecosystems.

Testing is where teams define the patterns, structure, and expectations that make automation possible. When test files are organized, test names are meaningful, and coverage reflects actual logic, not just surface interactions, then automated systems can step in without introducing noise.

That’s where agentic AI tools like Early Catch become a force multiplier. Once your baseline testing discipline is in place, Early Catch can generate and maintain unit tests automatically. It writes green tests to confirm expected behavior and red tests to flag regressions or edge cases most teams miss. It doesn’t replace engineers. It scales the intent behind your existing test suite.

Early Catch integrates directly into Jest, Vitest, and Mocha-based projects, building on the exact structure laid out in this guide.

Unit testing isn’t about ticking off assertions or inflating a coverage report. It’s about building enough confidence in your code to ship faster, refactor safely, and collaborate across teams without fear of breaking things quietly. The workflow you’ve seen here isn’t complex—but when applied consistently, it changes how teams move.

Once that structure is in place, automation becomes a strategic advantage. Agentic systems like Early Catch build on your foundation, generating and maintaining tests that evolve with your codebase without adding friction to your team’s workflow.

See how Early Catch helps your team test more without writing more.