
Every service your software touches is a potential point of failure. Maybe it's a form that deletes a record but doesn’t revoke tokens. Perhaps it's an analytics job that works fine until the range input is malformed, triggering a silent failure. API testing is how you catch those failures before your users do.

73% of companies believe a cybersecurity incident will disrupt their business in the next 12–24 months, yet only 3% meet the “Mature” threshold for readiness, which includes stable API and system integration practices.

For teams building across services, with changing schemas and asynchronous workflows, the challenge isn’t writing more tests but gaining the kind of coverage that reflects how their APIs are used.

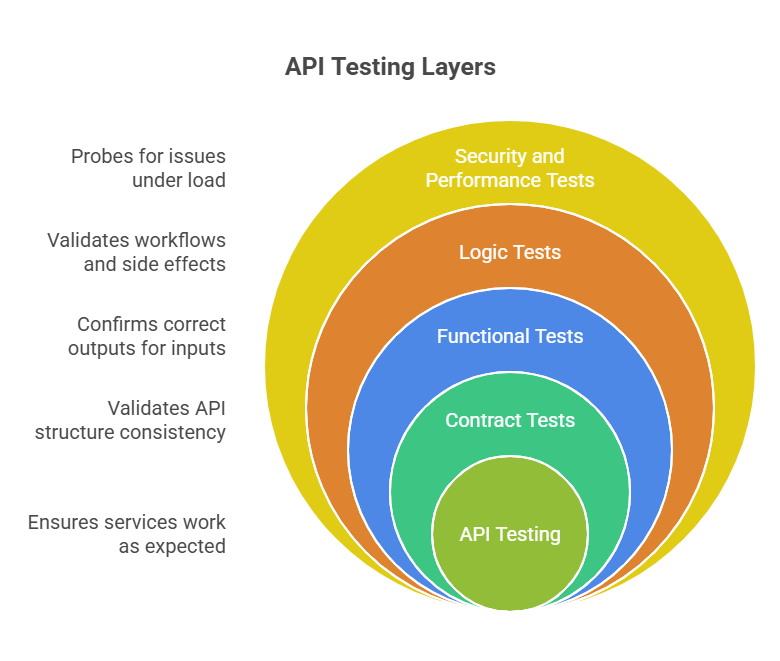

Visualizing the 5 Core Layers of API Testing: From contract validation to performance under load, this diagram breaks down each essential test layer, ensuring robust, real-world API reliability.

API testing is how you determine if your services work as expected. Not in a vacuum, but in production-like conditions, where inputs are messy, data shifts shape, and integrations don’t always behave. It’s not enough to get a 200 OK.

You need to know that what came back is correct, that the shape of the payload matches the contract, that errors are meaningful instead of silent, and that the right users see the correct data. This is where most real-world bugs live: between services, inside auth flows, and at the edges where your schema technically allows an input but your business logic quietly breaks. API testing enables you to catch those failures before your users do.

Tests typically focus on different layers of behavior, each targeting a specific kind of risk:

- Contract tests. Catch breaking changes early by validating that your API’s structure, fields, types, and response formats stay consistent across versions and deployments.

- Functional tests. Confirm that endpoints return the correct outputs for a given set of inputs.

- Logic tests. Validate workflows and side effects. For example, does a submitted form trigger the right downstream updates? Does deleting a record cascade properly across related resources?

- Security and performance tests. Probe for rate-limiting issues, broken auth flows, and response degradation under load.

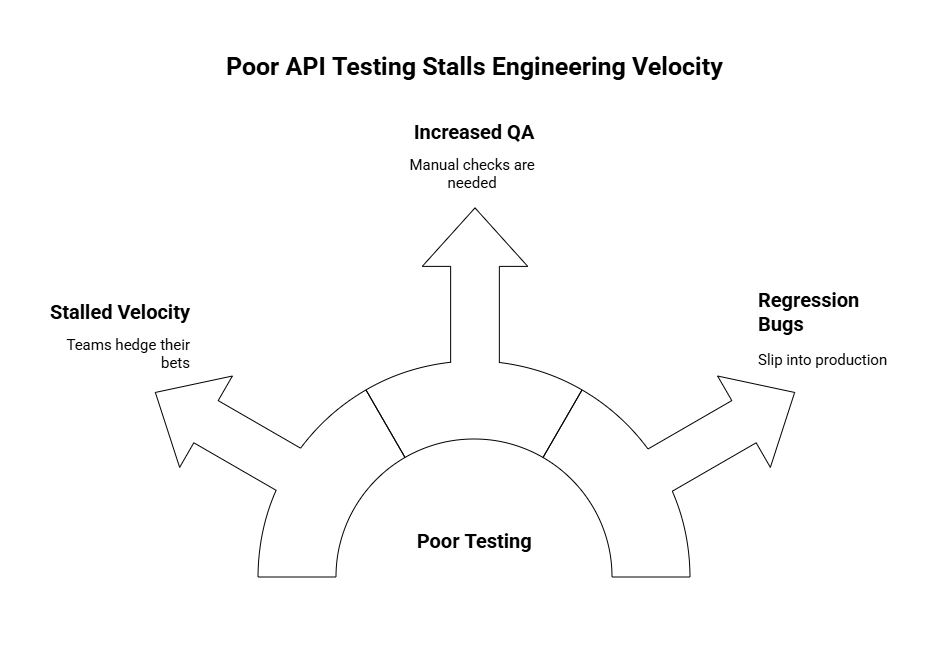

When engineering teams can’t trust that APIs behave consistently, velocity stalls. Instead of shipping confidently, teams hedge their bets. Manual QA gets pulled in “just to check.” Release windows tighten. Regression bugs slip into production under the radar, especially in services that assume the API layer is stable because the CI pipeline is green. That is a dangerous assumption in the face of rising cyberattacks targeting interconnected systems.

Where unit testing validates internal logic in isolation, and E2E tests trace complete workflows through UI layers, API tests serve as the connective tissue. They operate at the boundary where services interface with each other and with the outside world. This layer is often where bugs surface too late, because logic is assumed to “just work” once endpoints respond. But payload shape, auth scoping, and state assumptions can all silently break without clear test coverage here.

A poor API test strategy has a compounding cost. Over time, it erodes the system's internal credibility. Good API testing does more than protect endpoints. It restores the ability to delegate without fear.

Poor API Testing Stalls Engineering Velocity: This visual highlights how weak test coverage leads to increased QA, slower releases, and hidden regression bugs in production systems.

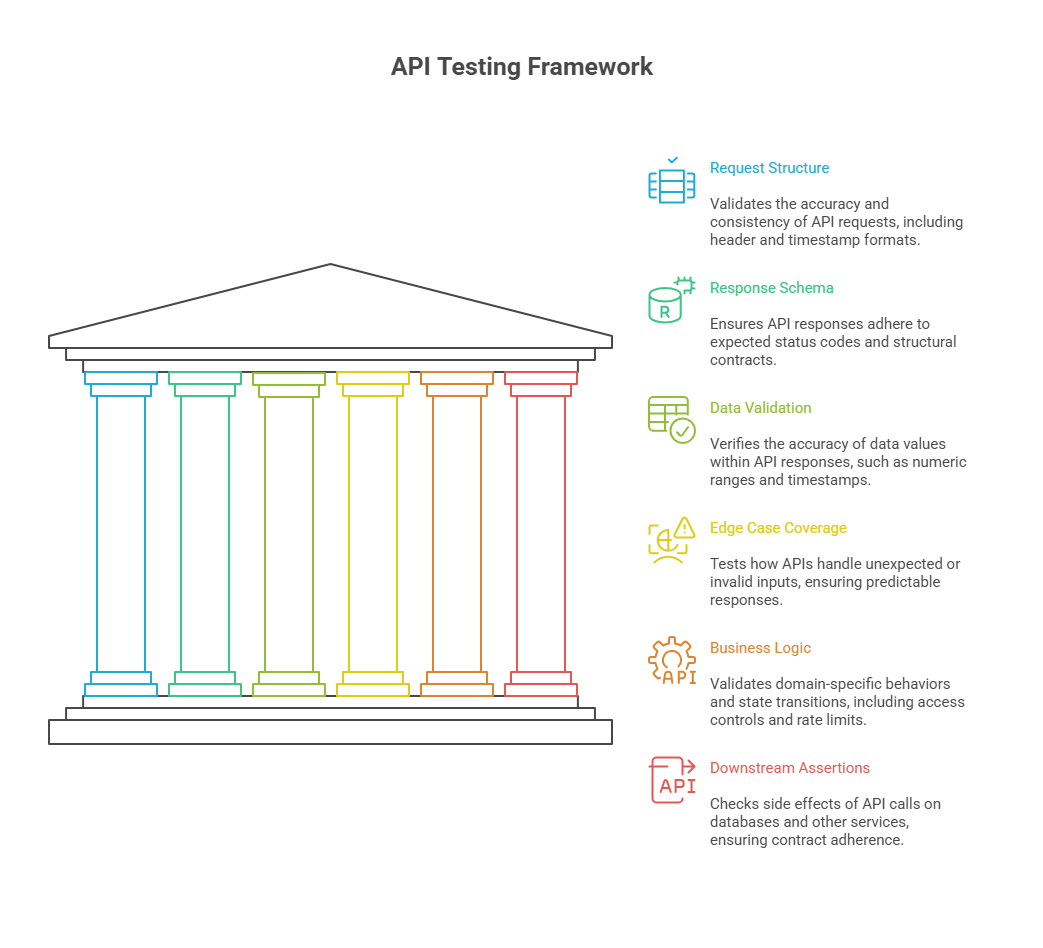

A reliable API test suite isn’t there to prove things work. It’s there to prove that they still work after everything underneath has changed, which is a foundational goal of good test coverage. That means validating not just what the API returns, but also how it behaves across various inputs, states, and downstream systems. These are the parts worth testing:

1. Request structure

APIs rarely fail because of broken requests. They fail because something almost valid slips through. For example, a timestamp in the wrong format might silently bypass a cache. Or a missing X-Feature-Version header could default the request to legacy behavior. Good tests replicate how real clients call the API, inconsistencies included.
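One way to exercise "almost valid" requests is to keep them in a small table next to the happy path. The sketch below is illustrative only: `checkReportRequest`, the `from` query parameter, and the rule that a missing `X-Feature-Version` header means legacy behavior are assumptions made for this example, not part of any real API.

```javascript
// Hypothetical request check that flags "almost valid" inputs.
// Accepts only full ISO 8601 UTC timestamps such as 2024-05-01T00:00:00Z.
const ISO_UTC = /^\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}(\.\d+)?Z$/;

function checkReportRequest({ headers = {}, query = {} }) {
  const problems = [];

  // HTTP header names are case-insensitive, so normalize before the lookup.
  const normalized = Object.fromEntries(
    Object.entries(headers).map(([name, value]) => [name.toLowerCase(), value])
  );
  if (!("x-feature-version" in normalized)) {
    problems.push("missing X-Feature-Version (assumed to mean legacy behavior)");
  }

  if (typeof query.from !== "string" || !ISO_UTC.test(query.from)) {
    problems.push("from is not a full ISO 8601 UTC timestamp");
  }
  return problems;
}

// Requests a real client might plausibly send, each slightly off.
const almostValid = [
  { name: "date without time", headers: { "X-Feature-Version": "2" }, query: { from: "2024-05-01" } },
  { name: "epoch seconds", headers: { "X-Feature-Version": "2" }, query: { from: "1714521600" } },
  { name: "header omitted", headers: {}, query: { from: "2024-05-01T00:00:00Z" } },
];

for (const { name, headers, query } of almostValid) {
  console.log(name, "->", checkReportRequest({ headers, query }));
}
```

Each row is a request that would pass a casual glance, which is exactly why it belongs in the suite.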

2. Response schema and status codes

A response must conform to both expected status codes and structural contracts. That includes field presence, data types, nesting, nullability, and the distinction between optional and required fields.
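As a rough illustration of what such a check involves, here is a minimal hand-rolled shape validator. In practice a team would more likely use a schema library; `validateShape`, `checkResponse`, and the `reportJobContract` fields are hypothetical names chosen for this sketch.

```javascript
// Minimal shape checker. Each field spec is { type, required?, nullable?, fields? }.
function validateShape(value, fields, path = "") {
  if (value === null || typeof value !== "object" || Array.isArray(value)) {
    return [`${path || "<root>"}: expected object`];
  }
  const errors = [];
  for (const [name, spec] of Object.entries(fields)) {
    const where = path ? `${path}.${name}` : name;
    if (!Object.prototype.hasOwnProperty.call(value, name)) {
      // Optional fields may be absent; required ones may not.
      if (spec.required) errors.push(`${where}: missing required field`);
      continue;
    }
    const fieldValue = value[name];
    if (fieldValue === null) {
      if (!spec.nullable) errors.push(`${where}: null not allowed`);
      continue;
    }
    const actual = Array.isArray(fieldValue) ? "array" : typeof fieldValue;
    if (actual !== spec.type) {
      errors.push(`${where}: expected ${spec.type}, got ${actual}`);
    } else if (spec.type === "object" && spec.fields) {
      errors.push(...validateShape(fieldValue, spec.fields, where)); // recurse into nesting
    }
  }
  return errors;
}

// Hypothetical contract for a report-job response.
const reportJobContract = {
  jobId: { type: "string", required: true },
  status: { type: "string", required: true },
  completedAt: { type: "string", required: true, nullable: true },
  links: { type: "object", fields: { self: { type: "string", required: true } } },
};

// Checks the status code and the body shape together.
function checkResponse(res, expectedStatus, contract) {
  const errors = [];
  if (res.status !== expectedStatus) {
    errors.push(`status: expected ${expectedStatus}, got ${res.status}`);
  }
  return errors.concat(validateShape(res.body, contract));
}

console.log(checkResponse({ status: 200, body: { jobId: 7, status: "queued" } }, 202, reportJobContract));
```

The point of the sketch is the distinction the contract encodes: `completedAt` must be present but may be null, while `links` may be absent entirely.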

3. Data validation

Field values matter as much as structure. Tests should verify that numeric ranges, IDs, timestamps, and computed fields reflect accurate business logic. Many critical bugs live here, hidden behind a valid shape but incorrect content.

4. Edge case coverage

APIs must respond predictably to unexpected input. This includes malformed bodies, invalid enum values, empty arrays, oversized payloads, and missing parameters.
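A table-driven test keeps these inputs cheap to add. The following sketch assumes a hypothetical `parseReportBody` function standing in for an endpoint's body handling; the 1 KB size limit, the `format` enum, and the specific status codes are assumptions for illustration.

```javascript
const MAX_BYTES = 1024;                   // assumed payload limit
const FORMATS = new Set(["csv", "json"]); // assumed enum values

// Hypothetical stand-in for an endpoint's request-body handling.
function parseReportBody(raw) {
  if (Buffer.byteLength(raw, "utf8") > MAX_BYTES) {
    return { status: 413, error: "payload too large" };
  }
  let body;
  try {
    body = JSON.parse(raw);
  } catch {
    return { status: 400, error: "malformed JSON" };
  }
  if (body === null || typeof body !== "object" || Array.isArray(body)) {
    return { status: 400, error: "body must be a JSON object" };
  }
  if (!("accountIds" in body)) {
    return { status: 422, error: "accountIds is required" };
  }
  if (!Array.isArray(body.accountIds) || body.accountIds.length === 0) {
    return { status: 422, error: "accountIds must be a non-empty array" };
  }
  if (!FORMATS.has(body.format)) {
    return { status: 422, error: "format must be csv or json" };
  }
  return { status: 202 };
}

// One row per edge case: [name, raw body, expected status].
const edgeCases = [
  ["malformed body", '{"accountIds": [', 400],
  ["invalid enum value", JSON.stringify({ accountIds: ["a1"], format: "xml" }), 422],
  ["empty array", JSON.stringify({ accountIds: [], format: "csv" }), 422],
  ["oversized payload", JSON.stringify({ accountIds: ["a".repeat(2000)], format: "csv" }), 413],
  ["missing parameter", JSON.stringify({ format: "csv" }), 422],
  ["happy path", JSON.stringify({ accountIds: ["a1"], format: "csv" }), 202],
];

// Collect the names of any cases whose status differs from the expectation.
const failures = edgeCases
  .filter(([, raw, expected]) => parseReportBody(raw).status !== expected)
  .map(([name]) => name);

console.log(failures.length === 0 ? "all edge cases handled" : failures);
```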

5. Business logic and state transitions

Tests should reflect domain-specific behavior. For example, deleting a resource should revoke associated tokens. Role-based access controls must prevent unauthorized access, a principle at the core of zero trust architecture. Rate limits, validation rules, and conditional logic need to be exercised under realistic sequences of calls, not just static snapshots.
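To make "realistic sequences of calls" concrete, here is a small sketch against an in-memory stand-in for a service. Everything in it (`createAccountService`, the roles, the status codes returned) is hypothetical; the shape of the test is the point: issue a token, use it, delete the account, then use the token again.

```javascript
// In-memory stand-in for an account service (hypothetical, for illustration).
function createAccountService() {
  const accounts = new Map(); // accountId -> { role }
  const tokens = new Map();   // token -> accountId
  let nextToken = 1;

  return {
    createAccount(id, role) {
      accounts.set(id, { role });
    },
    issueToken(id) {
      if (!accounts.has(id)) throw new Error("unknown account");
      const token = `tok_${nextToken++}`;
      tokens.set(token, id);
      return token;
    },
    deleteAccount(id) {
      accounts.delete(id);
      // Behavior under test: deleting an account revokes its tokens.
      for (const [token, owner] of tokens) {
        if (owner === id) tokens.delete(token);
      }
    },
    // Returns an HTTP-like status for reading targetId's report.
    readReport(token, targetId) {
      const callerId = tokens.get(token);
      if (callerId === undefined) return 401; // unknown or revoked token
      const caller = accounts.get(callerId);
      if (caller.role !== "admin" && callerId !== targetId) return 403;
      return accounts.has(targetId) ? 200 : 404;
    },
  };
}

// The test is a sequence of calls, not a single snapshot.
const service = createAccountService();
service.createAccount("alice", "member");
service.createAccount("bob", "member");
const aliceToken = service.issueToken("alice");

const ownReport = service.readReport(aliceToken, "alice");   // own data
const otherReport = service.readReport(aliceToken, "bob");   // someone else's data
service.deleteAccount("alice");
const afterDelete = service.readReport(aliceToken, "alice"); // token should be dead

console.log({ ownReport, otherReport, afterDelete });
```

If `deleteAccount` ever stops clearing tokens, the last call returns something other than 401 and the regression is caught at the API boundary.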

6. Downstream and side-effect assertions

You need to test side effects whenever an API call writes to a database, emits events, or invokes another service. Without visibility into these downstream behaviors, test suites can appear healthy even while breaking contracts in production.
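One common way to get that visibility in a test is to hand the code under test recording fakes instead of real infrastructure. The sketch below assumes a hypothetical `createReport` handler that writes one row and emits one event; the table name and event topic are invented for the example.

```javascript
// Recording fakes: every write and every emitted event lands in `calls`.
function createRecorder() {
  const calls = [];
  return {
    calls,
    db: {
      insert(table, row) {
        calls.push({ kind: "db.insert", table, row });
      },
    },
    bus: {
      emit(topic, payload) {
        calls.push({ kind: "event", topic, payload });
      },
    },
  };
}

// Hypothetical handler under test: persists a job, then announces it.
function createReport({ db, bus }, { accountId, range }) {
  const job = { id: `job_${accountId}`, accountId, range, status: "queued" };
  db.insert("report_jobs", job);
  bus.emit("report.requested", { jobId: job.id });
  return { status: 202, body: { jobId: job.id } };
}

const recorder = createRecorder();
const response = createReport(recorder, { accountId: "a1", range: "2024-05" });

// Assert on the side effects and their order, not only on the response.
const kinds = recorder.calls.map((call) => call.kind);
console.log(response.status, kinds);
```

A response-only assertion would still pass if the `emit` call were deleted; the recorded call list is what exposes it.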

The Pillars of Effective API Testing: A structured view of the six critical components (request structure, schema validation, edge case handling, business logic, and more) for building resilient APIs.

Most engineering leaders aren’t writing API tests themselves, but they do need to understand how those tests encode behavior. Once you understand the structure of a good test, you can reason about what’s covered, what’s missing, and what’s silently failing. Below are two examples: one manual, one automated.

Manual Test with curl

Imagine an internal API that triggers a usage report job for a customer account. The report is generated asynchronously and delivered via a callback URL when ready.

This test validates basic request structure and response behavior. A typical response:

The visible success belies what often breaks: malformed range values, invalid or unreachable callback URLs, jobs colliding with existing in-progress runs, or payload size limits exceeded during report generation.

Automated Test with Jest + supertest

To catch those cases earlier, here's a corresponding automated test:

It covers the obvious cases. However, what often goes untested is the logic tied to system state: whether a previous job is still running, whether the range violates retention policies, or whether the callback domain is allowlisted. This is where regressions hide, especially during changes to orchestration, queuing, or external dependencies.
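Those state-dependent branches can be sketched with a plain function and an explicit state object, which keeps the example runnable without a server or test framework. The `requestReport` handler, the 90-day retention window, the allowlisted host, and the status codes are all assumptions for illustration, not the behavior of any real service.

```javascript
const RETENTION_DAYS = 90;                                     // assumed retention policy
const ALLOWED_CALLBACK_HOSTS = new Set(["hooks.example.com"]); // assumed allowlist
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Hypothetical handler: `state.running` holds account IDs with a job in progress.
function requestReport(state, { accountId, from, callbackUrl }, now) {
  let host;
  try {
    host = new URL(callbackUrl).hostname;
  } catch {
    return { status: 400, error: "invalid callback URL" };
  }
  if (!ALLOWED_CALLBACK_HOSTS.has(host)) {
    return { status: 403, error: "callback host not allowlisted" };
  }
  const ageDays = (now.getTime() - new Date(from).getTime()) / MS_PER_DAY;
  if (Number.isNaN(ageDays) || ageDays > RETENTION_DAYS) {
    return { status: 422, error: "range outside retention window" };
  }
  if (state.running.has(accountId)) {
    return { status: 409, error: "job already in progress" };
  }
  state.running.add(accountId);
  return { status: 202 };
}

// A fixed clock keeps the retention check deterministic.
const now = new Date("2024-06-01T00:00:00Z");
const state = { running: new Set() };
const valid = { accountId: "a1", from: "2024-05-01T00:00:00Z", callbackUrl: "https://hooks.example.com/r" };

const results = {
  first: requestReport(state, valid, now).status,
  collision: requestReport(state, valid, now).status,
  tooOld: requestReport(state, { ...valid, accountId: "a2", from: "2023-01-01T00:00:00Z" }, now).status,
  badHost: requestReport(state, { ...valid, accountId: "a3", callbackUrl: "https://evil.example.net/r" }, now).status,
  badUrl: requestReport(state, { ...valid, accountId: "a4", callbackUrl: "not a url" }, now).status,
};

console.log(results);
```

Note that the second call uses the same input as the first and still gets a different answer. That is the kind of state-dependent behavior a stateless request/response test never sees.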

This is a single endpoint, and already we’re looking at half a dozen branching conditions. Add a second report type, another auth model, and one more backend system, and your test surface area has doubled. Multiply that across a service mesh or event-driven system, and manual coverage quickly collapses under its weight.

So, how do you validate all of it without creating a second engineering org just to maintain tests? That’s the scale problem, and it's what intelligent test systems are now designed to solve. The right coverage tools can help, but only if they align with real system behavior.

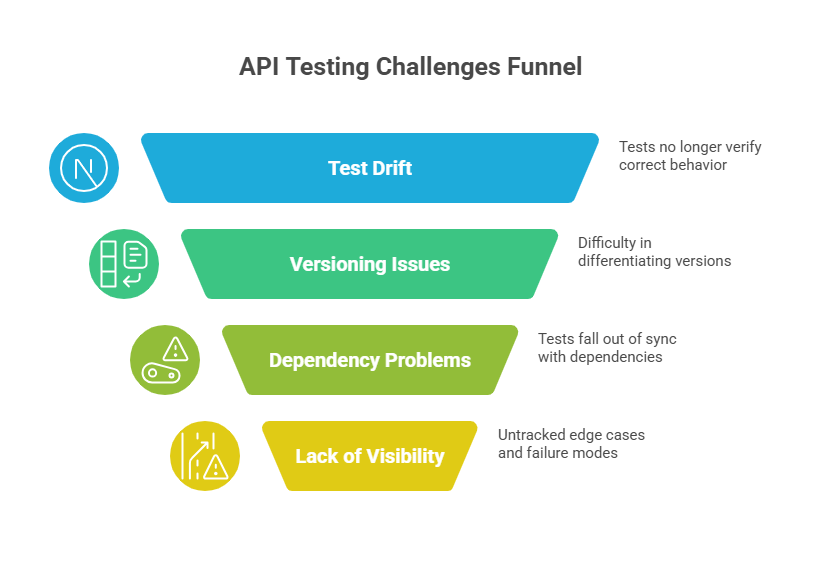

Challenges with Traditional API Testing

Writing a good API test is hard. Maintaining one is harder. As systems become increasingly complex, traditional approaches often begin to break down.

The first issue is test drift. As APIs evolve, teams change parameters, rename fields, and update business logic. Older tests often continue to pass, even though they no longer verify the correct behavior. Without a clear link between test coverage and system intent, these tests create a false sense of safety.

Versioning doesn’t just create overhead. It also introduces risk that is hard to attribute to any one version. Teams ship a small change behind a new version tag, but forget that an internal service still calls the old one. Schema drift becomes difficult to detect because tests are scoped to current usage rather than legacy assumptions. One version gets tested, while the others stay green only because nothing exercises them.
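A simple guard is to run the contract check for every version still in service, not just the newest. The sketch below uses hypothetical handlers and field lists to show how a shared refactor that quietly changes the old version's payload would be caught.

```javascript
// Hypothetical per-version contracts: the fields each version promises.
const contracts = {
  v1: ["id", "name"],
  v2: ["id", "displayName"],
};

// Hypothetical per-version handlers, standing in for real endpoints.
const handlers = {
  v1: () => ({ status: 200, body: { id: "42", name: "Ada" } }),
  v2: () => ({ status: 200, body: { id: "42", displayName: "Ada" } }),
};

// Checks every version that has a contract, including legacy ones.
function checkAllVersions(handlersByVersion, contractsByVersion) {
  const report = {};
  for (const [version, fields] of Object.entries(contractsByVersion)) {
    const handler = handlersByVersion[version];
    if (!handler) {
      report[version] = ["no handler found: version is untested"];
      continue;
    }
    const { body } = handler();
    report[version] = fields
      .filter((field) => !(field in body))
      .map((field) => `missing field ${field}`);
  }
  return report;
}

const beforeRefactor = checkAllVersions(handlers, contracts);

// Simulated drift: a shared serializer change leaks the v2 shape into v1.
const driftedHandlers = { ...handlers, v1: handlers.v2 };
const afterRefactor = checkAllVersions(driftedHandlers, contracts);

console.log(beforeRefactor, afterRefactor);
```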

Dependencies make it worse. A single API call might interact with three services, enqueue a background job, and update a cache. Testing that path means wiring mocks that behave just right or skipping the test entirely. Over time, teams update the orchestration but leave the scaffolding behind. The tests fall out of sync, and no one notices until something breaks in production.

The most dangerous problem is the absence of visibility. Teams are aware of which tests exist, but they rarely know what they’ve missed, a gap often addressed through unified vulnerability management. Edge cases, conditional logic, and failure modes go untracked. When coverage reporting is shallow or incomplete, risk accumulates quietly.

API Testing Challenges Funnel: From test drift to lack of visibility, this funnel illustrates how common issues compound and threaten test reliability as APIs scale.

Manual API testing stops keeping pace when the number of endpoints increases, logic shifts mid-sprint, or downstream behavior changes without notice. This is where Early API steps in.

Early API doesn’t just run tests. It acts as an agentic layer, observing how your services behave and what they expose, then working out what to test. Instead of requiring developers to anticipate every condition and edge case, Early API infers intent from real-world usage and architecture. It generates meaningful tests based on how systems function, not just how the documentation describes them. Early API doesn’t wait for a failed integration test or a Slack message from another team. It flags the drift as soon as it shows up.

You can't build reliable systems on guesswork. Testing APIs isn’t just about catching errors; it’s about making behavior visible, predictable, and trusted. That’s what lets teams move fast without breaking integrations, and what lets leaders delegate without second-guessing what’s quietly falling through the cracks.

Manual testing is a start. It teaches what correctness looks like. But scaling that model across fast-moving services, evolving schemas, and versioned endpoints breaks down quickly. Early API rewrites the rules. It observes real service behavior, surfaces integration flaws before they become visible, and scales testing across APIs without requiring teams to handcraft every edge case. It flags what others miss: the logic gaps, schema mismatches, and cascading errors.

If your engineers are spending more time debugging regressions than shipping features, it’s time to change how you think about testing.

Discover how Early API enables your team to extend trust across systems without slowing anyone down.