
Code review is meant to build confidence, yet more often it adds friction: back-and-forth that slows delivery and drains the team's energy. That growing pain is what has made AI-enabled review so appealing. Instead of human reviewers carrying the entire load, LLM-driven tools can automate repetitive tasks such as generating tests, surfacing risks, and flagging bugs before they reach production.

Microsoft's latest Work Trend Index reports that 75 percent of global knowledge workers now use generative AI at work, and that usage nearly doubled in the preceding six months.

AI code review promises relief from review fatigue and a way to accelerate delivery without compromising quality. Yet many teams adopt AI review only to find that it does not fit well into their workflow. The tools are powerful, but execution falls short.

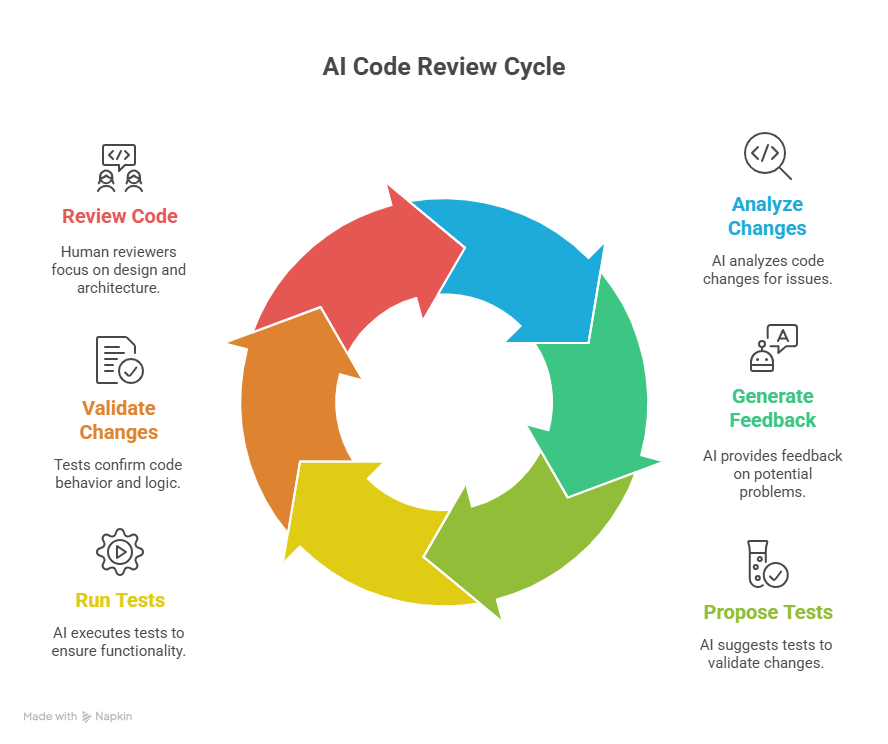

AI Code Review Cycle

AI code review is the use of machine learning models to analyse changes, generate meaningful feedback, and propose tests before a human ever leaves a comment.

Automated test generation fits the AI code review workflow as the evidence layer that validates what a pull request actually does. During a review, an AI system can do more than point out style issues or potential bugs; it can create and run unit and integration tests for the exact changes in the diff. Those tests become part of the pull request checks, giving reviewers proof that existing behavior still holds and that new logic is exercised.

This closes the common gap where code “looks fine” but fails in production because no one wrote new tests or updated old ones. By adding automated test generation to the same pipeline that handles static analysis and inline comments, AI review delivers both guidance and verification. Reviewers can focus on architecture and design choices while merging code that already carries its own safety net.

The challenging part of adopting AI code review is making it stick. Many teams jump in only to run into the same traps that stall adoption and frustrate developers. A strong starting point is to review your broader security posture and understand the exposure management landscape. Here are the top four pitfalls of using AI in your code review process.

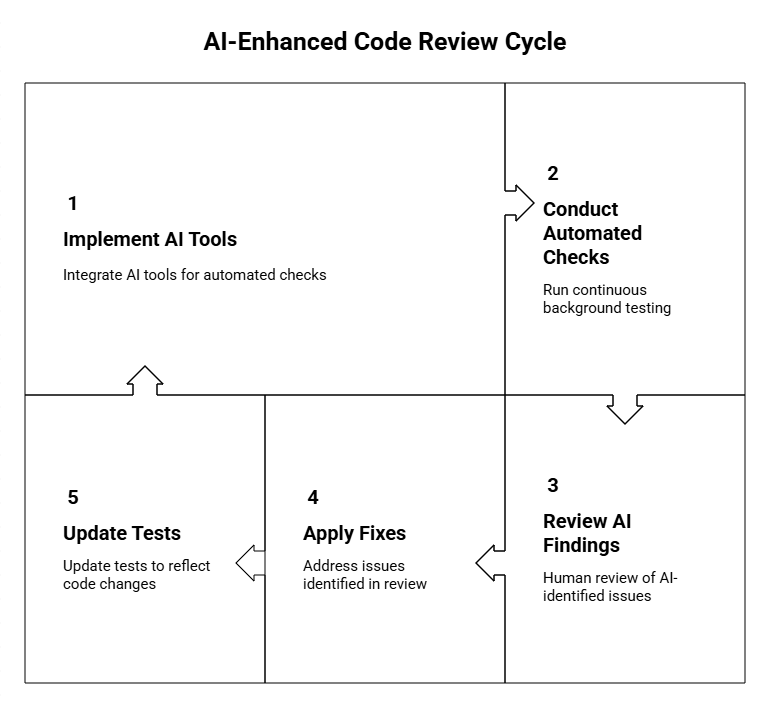

AI code review process when using tools

1. Treating AI like a silver bullet

Automated review adds real value when it supports, rather than replaces, the development team. Tools that maintain test coverage and update checks as code changes reduce the grind of repetitive fixes and help developers focus on architecture and business logic. Instead of relying on static scans that age quickly, agentic AI tools such as Early Catch quietly keep the safety net current, so edge cases and regressions are less likely to slip through.

The most effective process pairs that continuous background testing with thoughtful human review. Developers still decide how a change fits the product and spend less time chasing stale tests or rerunning manual checks. The result is faster delivery, steadier quality, and a review culture where automation handles the routine while engineers handle the judgment.

2. Poor integration

When code reviewers must leave their IDE or CI/CD pipeline to check results, the insights arrive too late and quickly fade into the background. A new tool delivers little value if it sits in a separate dashboard, disconnected from daily work. Teams that integrate automated review directly into pull requests and build workflows see far higher adoption because feedback appears where and when developers are making changes.

3. Ignoring coverage gaps

Standard AI code review catches style slips, syntax errors, and common security issues, but it does not evaluate business logic or predict how a feature will affect downstream systems. In a code review workflow, this means a pull request can pass automated checks while leaving critical paths untested. The fix is to pair conventional AI review with an agentic tool such as Early Catch. When a developer opens or updates a pull request, Early Catch generates targeted unit tests for the changed code, runs them during the build, and posts the results directly in the review. Reviewers see fresh test outcomes alongside their normal comments, so logic and intent are verified before approval. This keeps reviews fast while closing coverage gaps as part of the standard review cycle.

4. Manual triggers only

Many review tools require a developer to decide when to run them, turning quality checks into a separate chore. This slows feedback, creates uneven coverage, and often means important changes are merged before a scan ever happens. Effective solutions run automatically whenever code changes. Integrating checks directly into pull requests and the CI/CD pipeline ensures that every commit, branch update, and merge request is scanned without a second thought. Automatic execution also produces a consistent audit trail, which helps teams meet compliance requirements and makes it easy to spot patterns in recurring issues. The result is a review process that stays fast, reliable, and free from the gaps that manual scheduling leaves behind.
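In practice this is usually configured in the CI system itself; in GitHub Actions, for example, a workflow with `on: pull_request` runs by default when a pull request is opened, reopened, or synchronized (new commits pushed). The sketch below expresses the same rule in Python for a hypothetical self-hosted webhook handler. `should_run_review`, `handle_event`, and `AuditEntry` are illustrative names, not part of any tool's API. Every decision, including skipped runs, is appended to a log, which is the kind of audit trail described above.

```python
from dataclasses import dataclass
from typing import List, Optional

# Pull request actions that mean "there is new code to review".
# These match the default activity types for a GitHub Actions `on: pull_request` trigger.
PR_CODE_ACTIONS = {"opened", "synchronize", "reopened"}


@dataclass(frozen=True)
class AuditEntry:
    """One row of the audit trail: what happened and whether review ran."""
    event: str
    action: Optional[str]
    commit_sha: str
    triggered: bool


def should_run_review(event_name: str, action: Optional[str]) -> bool:
    """Decide automatically, with no human trigger, whether an event starts a review."""
    if event_name == "push":
        return True  # every pushed commit gets checked
    if event_name == "pull_request":
        return action in PR_CODE_ACTIONS  # ignore label, assign, close, etc.
    return False  # unrelated events (issues, comments) never trigger a scan


def handle_event(event_name: str, action: Optional[str], commit_sha: str,
                 audit_log: List[AuditEntry]) -> bool:
    """Record every decision so triggered and skipped runs are both auditable."""
    triggered = should_run_review(event_name, action)
    audit_log.append(AuditEntry(event_name, action, commit_sha, triggered))
    return triggered
```

Keeping the decision in one small function makes the trigger policy easy to test and review, and logging skipped events as well as triggered ones is what lets a team later show that no code change went unscanned.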

To get lasting value from AI code review, you must be deliberate about how it aligns with your team, your technology stack, and your development process. Here’s a practical playbook for making it work.

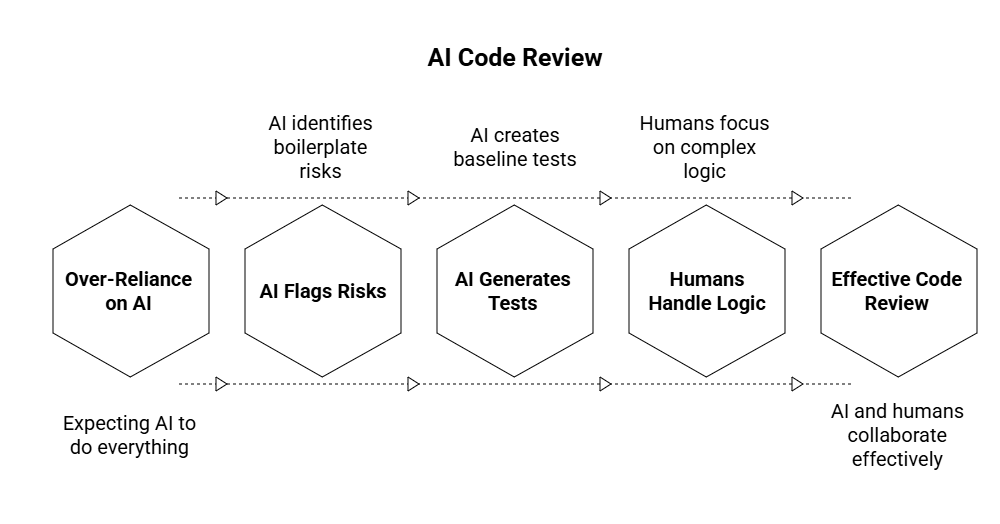

1. Define the role of AI vs humans

One of the biggest mistakes teams make is expecting AI to do everything. The smarter approach is to let AI police style, flag boilerplate risks, and generate baseline tests while humans handle architectural decisions, business logic, and trade-offs. In practice, this means letting the AI catch a missing input check while reviewers focus on whether the validation actually meets customer needs.
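To make that split concrete, here is a hypothetical `apply_discount` function. The range check is the kind of missing input validation an AI reviewer can reasonably flag; whether a 100 percent discount should be allowed at all is a product decision that stays with the human reviewer. The function name and rules are assumptions for illustration only.

```python
def apply_discount(price: float, percent: float) -> float:
    """Return `price` reduced by `percent`, where percent is between 0 and 100."""
    # The mechanical part an AI reviewer can catch: without this check,
    # percent=150 would silently produce a negative price.
    if not 0 <= percent <= 100:
        raise ValueError(f"percent must be between 0 and 100, got {percent}")
    # The judgment part for a human: is a 100% discount (a free item) a valid
    # business case, or should the upper bound be lower? The code cannot know.
    return round(price * (1 - percent / 100), 2)
```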

2. Pick tools that fit your stack

AI code review delivers lasting value only when it aligns with the way your team builds software. The best tools plug directly into the places where code is written and merged, such as version control for pull requests, the CI/CD pipeline for enforcement, and the IDE for local checks, so feedback arrives in real time.

Generic “works for every language” platforms often fall short because they miss ecosystem-specific patterns and optimizations. For example, a JavaScript-heavy team gains far more from a reviewer trained on Node.js idioms and React conventions than from a broad but shallow engine. Choose AI that understands your frameworks and workflows so developers stay in one environment and the review fits naturally into the development process.

3. Build trust in AI-driven code reviews

AI review can speed delivery only if developers believe the feedback reflects how their code really behaves. That trust comes from a pipeline that does more than run static scans. During a pull request, conventional AI can highlight style, syntax, and known security issues, but the review stays incomplete until the changes are exercised in context. Integrating an agentic layer that automatically writes and executes targeted tests for the new code closes that gap and gives reviewers concrete results to check. When each pull request arrives with fresh tests and pass–fail outcomes, developers can verify recommendations against live evidence instead of treating a green check as the final word. Teams can strengthen this further by distinguishing component tests from unit tests and by applying strategies to improve unit test coverage.

AI code review spectrum

4. Close the coverage gap with agentic AI

The hardest part of code review is not spotting style issues but proving that the code still behaves as intended. Conventional AI tools can flag what looks wrong, yet they rarely confirm that what looks correct will keep working under real conditions. That leaves a gap between visual approval and functional assurance. Agentic AI changes the equation by generating and running targeted tests the moment a pull request is opened. It validates current behavior with passing tests and probes edge cases with tests designed to fail when a bug is present, so reviewers see evidence instead of guesses. When those tests do uncover hidden defects, a structured root cause analysis process helps teams act on them quickly.

Because these systems run automatically whenever code changes or critical paths are touched, they keep coverage fresh without extra steps from developers. For example, when a new payment workflow is submitted, the agent creates tests to confirm discount rules still apply and attempts to break the logic with expired coupons. Reviewers merge with concrete proof of stability rather than a simple “looks good,” giving the team confidence that production will match expectations.
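The payment example can be sketched as a pair of tests. Everything here is hypothetical (`Coupon`, `apply_coupon`, amounts in integer cents, a coupon staying valid through its expiry date); it shows the shape of what an agent might generate, not Early Catch's actual output. The first is a green test that pins down behavior that works today, so a later regression turns it red. The second probes the logic with an expired coupon: it passes against this implementation, but would fail, and so flag the bug, if the expiry check were ever dropped.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Coupon:
    code: str
    percent_off: int
    expires: date  # assumption: the coupon is valid through this date, inclusive


def apply_coupon(total_cents: int, coupon: Coupon, today: date) -> int:
    """Return the order total in cents after applying the coupon, if still valid."""
    if today > coupon.expires:
        return total_cents  # expired coupons leave the total unchanged
    return total_cents - total_cents * coupon.percent_off // 100


# Green test: locks in the existing discount rule, including the last valid day.
def test_valid_coupon_still_applies():
    coupon = Coupon("SAVE10", 10, expires=date(2030, 1, 31))
    assert apply_coupon(5_000, coupon, today=date(2030, 1, 31)) == 4_500


# Probe: tries to break the logic with a coupon one day past expiry.
# If apply_coupon lost its expiry check, this assertion would fail (turn red).
def test_expired_coupon_is_rejected():
    coupon = Coupon("SAVE10", 10, expires=date(2030, 1, 31))
    assert apply_coupon(5_000, coupon, today=date(2030, 2, 1)) == 5_000
```

Using integer cents avoids floating-point rounding in money arithmetic, and passing `today` in as a parameter keeps both tests deterministic regardless of when the pipeline runs.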

Initial versions of AI code review acted like copilots, flagging style slips, spotting risky code, or suggesting small rewrites. Helpful, yes, but always reactive and dependent on a human trigger. The evolution now underway is toward tools that act on their own, running proactively when coverage dips or when sensitive parts of the codebase change. Instead of waiting for developers to ask, these systems anticipate where attention is needed and surface proof through tests, not just opinions.

That shift changes the human role. Reviewers will focus more on design trade-offs, performance implications, and long-term maintainability, areas where vibe coding often falls short. Vibe coding, common among junior developers, moves quickly and relies on intuition but rarely considers architecture or long-term robustness.

Agentic AI testing counterbalances that tendency by automatically generating green tests to preserve existing behavior and red tests to probe for hidden bugs, ensuring that code born from rapid experimentation is still subjected to rigorous validation. Teams experimenting with this style can benefit from tooling designed to support vibe coding while maintaining code quality.

This is also where compliance and security expectations are heading. Organizations will need to show that every release has been systematically tested, not just subjectively reviewed. AI augmentation makes that achievable without burning out developers. For teams managing regulated data, requirements around Controlled Unclassified Information (CUI) add another layer of compliance to consider. We are already seeing this future in action.

AI code review is about making development and deployment faster, sharper, and less draining. The teams that get it right are the ones that define clear roles for AI versus humans, integrate tools into their existing workflows, build trust in the feedback loop, and let automation handle the trivial tasks. The final step is closing the coverage gap, where most tools still fall short.

If you’re evaluating AI review for your team, ask yourself this: does it only comment on code, or does it also provide evidence through test coverage? That’s where the next wave is heading, and where Early Catch already delivers. Book a demo today.