
Testing has not kept pace with the speed of modern releases. Teams are pushing code faster, across more services, and into production environments that change daily. Tools like Copilot and prompt-based generators help at the margins. They wait for a prompt, suggest a snippet, or display a coverage number that hides more than it reveals, but they don’t manage coverage drift or maintain suites as code changes. That responsibility still falls back on engineers, and at scale it becomes unmanageable.

About 42% of enterprise-scale organizations have actively deployed AI in their business, while another 40% are still exploring or experimenting with its usage.

Let’s look at how this shift is already playing out and what it means for velocity, reliability, and the leverage of engineering teams.

Prompt-based tools can generate a test or two on request, but they still rely on someone to identify the gap, ask for the output, and verify it manually. That workflow works in small doses but collapses under the pace and complexity of modern software delivery. Testing is unusually well-suited to full autonomy because:

- The objectives are clear: verify specific behaviors, catch regressions, and maintain coverage

- Inputs and expected outputs are clearly defined

- Pass/fail states are unambiguous

- Execution can be automated and repeated at scale

These characteristics allow an AI system to run continuously, detect where coverage is missing or outdated, and update tests without interrupting development. The scale and speed of current development make this level of autonomy practical and, in many cases, necessary. Large, distributed codebases change in small but constant ways, and a mix of legacy and greenfield code has made traditional testing workflows harder to sustain. When an AI system owns the testing process, it can respond at the same frequency as the changes themselves, keeping the safety net accurate while the code underneath it shifts.

Manual test writing leaves gaps, and traditional automation only scales so far. AI changes that equation. By analyzing code, patterns, and production behavior, it can generate, prioritize, and evolve tests at a pace that humans alone can’t match. Here are seven practical ways AI is reshaping software testing today.

1. Generating unit tests automatically

Before AI-assisted testing, unit test coverage relied on engineers writing each case manually, often under deadline pressure. That left edge cases and error paths underrepresented. This is where automated unit testing practices offer a strong foundation, ensuring consistency while AI accelerates coverage expansion. Now, AI models analyze function logic and generate both success-path (green) and failure-path (red) tests in seconds. They pick up on conditions that are often missed, such as null handling or unanticipated input ranges.

These tests can be produced directly in the IDE while code is written or in CI pipelines as changes are committed. The result is faster coverage expansion without diverting hours from core development work, so teams can maintain quality even when release schedules are tight.
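To make the green-path and red-path distinction concrete, here is a minimal sketch of the kind of suite such a tool might produce. The function `parse_quantity` and its rules are hypothetical, invented for illustration; the point is only that the null, out-of-range, and malformed-input cases sit alongside the happy path.

```python
import unittest


def parse_quantity(raw):
    """Hypothetical function under test: parse a positive integer quantity."""
    if raw is None:
        raise ValueError("quantity is required")
    value = int(raw)  # raises ValueError for non-numeric strings
    if value <= 0:
        raise ValueError("quantity must be positive")
    return value


class ParseQuantityTests(unittest.TestCase):
    # Green path: valid input produces the expected value.
    def test_valid_quantity(self):
        self.assertEqual(parse_quantity("3"), 3)

    # Red paths: the conditions that tend to be skipped under deadline pressure.
    def test_none_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_quantity(None)

    def test_zero_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_quantity("0")

    def test_non_numeric_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_quantity("abc")
```

Saved as a module, the suite runs with `python -m unittest`. Three of the four cases are failure paths, which is roughly the ratio that manual test writing tends to invert.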

2. Closing code coverage gaps

Coverage dashboards reveal untested branches, untouched error handlers, and functions that have never been exercised in tests, but they rarely help you fix those problem areas. For teams evaluating penetration testing as a service (PTaaS) providers, this type of automated coverage can complement external security testing.

AI systems can scan for untested or lightly tested sections, then generate targeted tests to fill those gaps. This includes drawing on historical data to track which areas repeatedly fall out of coverage, much like traditional code coverage tools surface blind spots across a growing codebase.
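The scanning step can be pictured as a simple filter over per-function coverage data. The sketch below assumes a hypothetical `FunctionCoverage` record and an arbitrary 80% threshold; a real system would read these numbers from its coverage tool's report rather than from a hard-coded list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FunctionCoverage:
    """Hypothetical per-function coverage record."""
    name: str
    total_lines: int
    covered_lines: int

    @property
    def ratio(self) -> float:
        # Treat an empty function as fully covered to avoid dividing by zero.
        if self.total_lines == 0:
            return 1.0
        return self.covered_lines / self.total_lines


def find_gaps(report, threshold=0.8):
    """Return functions below the threshold, least covered first."""
    gaps = [f for f in report if f.ratio < threshold]
    return sorted(gaps, key=lambda f: f.ratio)


# Illustrative data only.
report = [
    FunctionCoverage("charge_card", total_lines=40, covered_lines=38),
    FunctionCoverage("handle_timeout", total_lines=12, covered_lines=0),
    FunctionCoverage("retry_payment", total_lines=20, covered_lines=9),
]
gap_names = [f.name for f in find_gaps(report)]
print(gap_names)  # ['handle_timeout', 'retry_payment']
```

Sorting by ratio puts the never-exercised error handler at the top of the queue, which is where targeted test generation would start.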

3. Maintaining tests as code evolves

As code changes, tests frequently become outdated. They might be calling deprecated functions or missing new parameters. The result is brittleness and a rise in false failures.

AI can detect when a test no longer matches the current implementation and regenerate it automatically. The lag between implementation changes and test updates is removed. This reduces flakiness, keeps CI runs reliable, and allows engineers to focus on building features instead of spending hours rewriting stale test cases.
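One simple signal that a test has gone stale is that the signature of the function it targets has changed since the test was written. The following sketch assumes a hypothetical `recorded` mapping that stores each test's target and the signature seen at generation time. It uses Python's standard `inspect.signature` and covers only this one kind of drift, not behavioral changes.

```python
import inspect


def signature_fingerprint(func):
    """Return the function's parameter list as a string, e.g. '(sku, currency)'."""
    return str(inspect.signature(func))


def find_stale_tests(recorded, current_functions):
    """List tests whose target function changed or disappeared since recording.

    recorded: maps test name -> (target function name, fingerprint at write time)
    current_functions: maps function name -> function object as it exists now
    """
    stale = []
    for test_name, (target, old_fingerprint) in recorded.items():
        func = current_functions.get(target)
        if func is None or signature_fingerprint(func) != old_fingerprint:
            stale.append(test_name)
    return sorted(stale)


# Current code: get_price gained a required `currency` parameter,
# and get_discount has been removed entirely.
def get_price(sku, currency):
    return 0


def get_stock(sku):
    return 0


recorded = {
    "test_get_price": ("get_price", "(sku)"),
    "test_get_stock": ("get_stock", "(sku)"),
    "test_get_discount": ("get_discount", "(sku)"),
}
stale = find_stale_tests(recorded, {"get_price": get_price, "get_stock": get_stock})
print(stale)  # ['test_get_discount', 'test_get_price']
```

The flagged tests are the candidates for regeneration; `test_get_stock` is left alone because its target is unchanged.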

4. Testing API contracts and logic

When APIs change, the risk of breaking downstream integrations is high, especially if contract tests aren’t updated at the same time. Similar to following an essential API security checklist, keeping contract tests aligned ensures endpoints behave as expected and remain resilient against integration risks. AI can detect changes by inferring schemas from specifications or observing live request-response patterns. It then generates both integration and failure-path tests to cover the new behavior.

AI secures APIs with unit, contract, load, and end-to-end tests.

Integrated with tools like Early API, this happens automatically as endpoints evolve, so tests reflect the current reality rather than outdated assumptions. Teams get immediate feedback on schema mismatches, validation errors, or altered logic before changes reach consumers, preserving reliability across interconnected services.
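Detecting a schema mismatch comes down to comparing what an endpoint used to return with what it returns now. This sketch assumes contracts are reduced to flat field-to-type mappings, which is a simplification of real specification formats. The function names are illustrative and not part of any particular tool.

```python
def diff_contract(old, new):
    """Compare two flat field->type mappings and describe what changed."""
    removed = sorted(set(old) - set(new))
    added = sorted(set(new) - set(old))
    retyped = sorted(
        name for name in set(old) & set(new) if old[name] != new[name]
    )
    return {"removed": removed, "added": added, "retyped": retyped}


def is_breaking(diff):
    # Removing or retyping a field can break existing consumers;
    # adding a field is treated as backward compatible in this sketch.
    return bool(diff["removed"] or diff["retyped"])


# Illustrative response schemas for two versions of the same endpoint.
old_schema = {"id": "integer", "email": "string", "age": "integer"}
new_schema = {"id": "string", "email": "string", "nickname": "string"}

diff = diff_contract(old_schema, new_schema)
print(diff)  # {'removed': ['age'], 'added': ['nickname'], 'retyped': ['id']}
print(is_breaking(diff))  # True
```

Each entry under `removed` or `retyped` points at a contract test that needs updating and at a failure-path test worth adding for consumers still sending the old shape.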

5. Identifying risky code paths automatically

Not all code carries equal risk. Deeply nested logic, rarely executed branches, or areas with a history of defects deserve more attention than well-tested utility functions. AI can analyze code complexity, execution frequency, and historical bug data to flag these risk zones.

It then generates targeted tests to cover edge cases or failure modes that might otherwise be overlooked. This moves testing from a blanket approach to one guided by actual risk, raising the odds of catching critical issues without bloating the test suite unnecessarily.
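A risk-guided approach needs some way to rank code paths. The formula below is an arbitrary illustration, not an established metric: it multiplies complexity by defect history and gives a small boost to rarely executed paths. The `CodePath` fields and sample numbers are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CodePath:
    """Hypothetical record combining the three signals named above."""
    name: str
    complexity: int      # e.g. cyclomatic complexity
    calls_per_day: int   # observed execution frequency
    past_defects: int    # bugs previously traced to this path


def risk_score(path):
    # Rarely executed paths get a boost between 1x and 2x, because
    # problems there are less likely to surface through normal use.
    rarity = 1.0 / (1 + path.calls_per_day)
    return path.complexity * (1 + path.past_defects) * (1 + rarity)


def rank_by_risk(paths):
    """Return paths ordered from highest to lowest risk score."""
    return sorted(paths, key=risk_score, reverse=True)


# Illustrative data only.
paths = [
    CodePath("format_date", complexity=2, calls_per_day=10000, past_defects=0),
    CodePath("apply_refund", complexity=14, calls_per_day=3, past_defects=4),
    CodePath("sync_inventory", complexity=9, calls_per_day=500, past_defects=1),
]
ranked_names = [p.name for p in rank_by_risk(paths)]
print(ranked_names)  # ['apply_refund', 'sync_inventory', 'format_date']
```

Whatever the exact weighting, the ordering is what matters: test generation effort goes to `apply_refund` first, and the well-exercised utility comes last.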

6. Preventing regressions in PRs

Even small pull requests can introduce regressions if they touch fragile parts of the codebase. In the same way that organizations adopt identity lifecycle management tools to keep user access aligned with constant changes, automated regression testing ensures code remains stable as it evolves. AI can intercept these changes during review, reasoning about the modified logic and generating red-path tests to simulate likely failure conditions.

In setups that run Early Catch in CI, this happens before merge, giving engineers immediate visibility. They can see the exact scenarios that would break under the new code and address them, reducing the likelihood of post-merge rollbacks or emergency fixes in production.
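One small piece of a pre-merge check can be sketched without any AI at all: working out which existing tests a change touches and which changed functions have no tests. The sketch below is a generic illustration with hypothetical names, not a description of how any specific product works. The functions it reports as uncovered are where newly generated red-path tests would be aimed.

```python
def review_change(changed_functions, tests_by_function):
    """Split changed functions into those with existing tests and those without.

    Returns (tests to run, changed functions that have no tests).
    """
    to_run = set()
    uncovered = []
    for func in changed_functions:
        tests = tests_by_function.get(func, [])
        if tests:
            to_run.update(tests)
        else:
            uncovered.append(func)
    return sorted(to_run), sorted(uncovered)


# Illustrative data: a pull request that modifies two functions.
changed = ["apply_refund", "format_receipt"]
tests_by_function = {
    "apply_refund": ["test_refund_full", "test_refund_partial"],
    "charge_card": ["test_charge"],
}

to_run, uncovered = review_change(changed, tests_by_function)
print(to_run)     # ['test_refund_full', 'test_refund_partial']
print(uncovered)  # ['format_receipt']
```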

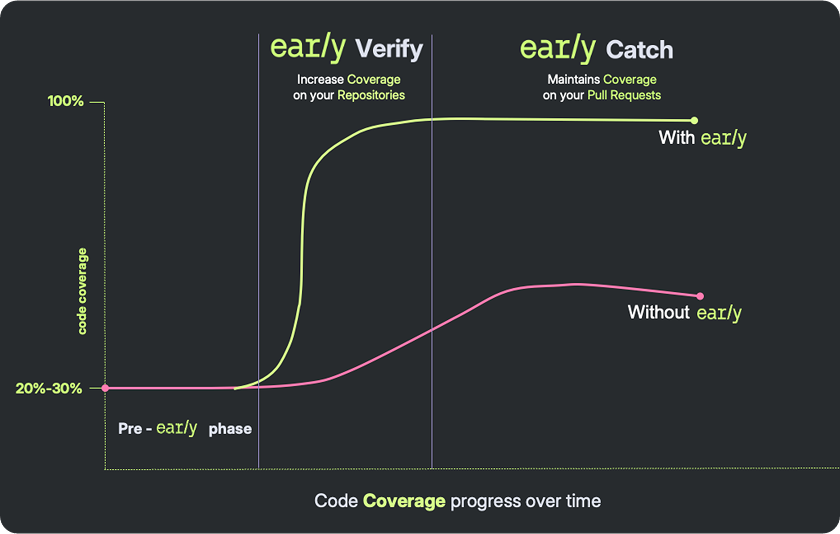

Early lifts coverage and prevents regressions automatically.

7. Reducing developer burden without reducing test quality

Under delivery pressure, test writing is often the first thing skipped, but this leaves the codebase vulnerable. AI eliminates much of the manual overhead by producing high-quality tests that follow established patterns while still adapting to the code that is currently under review.

Similar to how AI test automation tools streamline repetitive quality checks, these systems handle the repetitive aspects of test creation, freeing developers to focus on design and implementation.

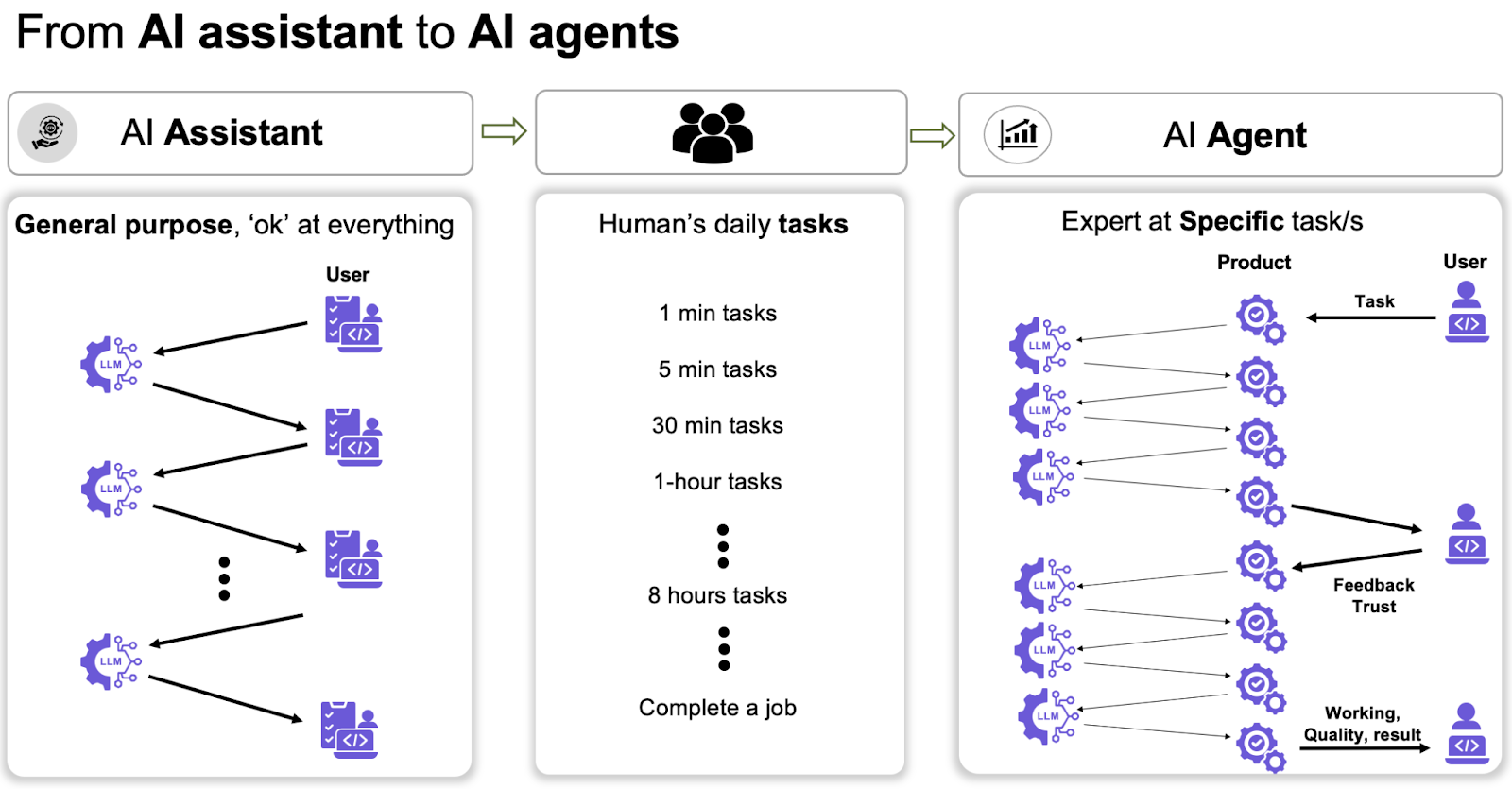

Autocomplete tools can write tests, but the work stops there. They do not decide which parts of the codebase need attention, they do not maintain tests when code changes, and they do not validate outcomes. The responsibility for coverage still falls on engineers.

AI agents move beyond assistance, owning test coverage end to end.

Agentic systems approach the problem differently. They run continuously in the background, scanning repositories, watching commits, and generating or repairing tests on their own. Instead of waiting for a prompt, they respond in real time to changes, update tests that no longer match the current logic, and make sure that regressions are surfaced before they reach production.

Multi-service architectures, rapid release schedules, and sprawling repositories cannot be supported by tools that only assist on request. Speed and scale are the differentiators: a system that keeps up with both turns testing from a manual chore into an embedded part of the delivery process.

For teams already hitting the limits of assistive tools, this move to fully autonomous testing is the only way to ensure quality isn’t lagging behind deployments.

When choosing an AI testing platform, it helps to think about how it will perform under real delivery conditions. Here are eight key criteria.

- Speed – Generates and executes tests quickly enough to keep pace with your team’s release cadence.

- Scale – Handles entire repositories, from small services to complex multi-service architectures.

- Legacy and new code – Works just as effectively on legacy modules with no prior tests as it does on freshly written features.

- Autonomous operation – Works without constant prompting, managing test creation and maintenance independently.

- Breadth of coverage – Supports unit, logic, and integration testing in a single system.

- Coverage maintenance – Keeps test suites accurate by adapting them automatically to match new or refactored code.

- Trend tracking – Monitors coverage, stability, and defect detection to guide long-term quality efforts and avoid brittleness.

- Seamless integration – Works inside your CI pipelines, IDE, and version control without disrupting existing workflows.

The real shift in AI testing is about ownership. Copilot-style tools can suggest tests, but they leave the hard parts to the team: maintenance, accuracy, and alignment with code changes. Agentic systems take testing off the developer’s plate entirely. They can generate, maintain, and execute tests at the pace, scale, and reliability that modern codebases demand.

Early’s platform was built for this shift. By embedding into your CI/CD workflow, learning your codebase, and keeping coverage aligned with every change, it turns testing into a continuous, autonomous process. The result is consistent quality without constant oversight.

If your team is ready to move from assistive tools to AI that takes full responsibility for testing outcomes, explore how Early fits into your workflow.