
- Code coverage is not test quality. It measures how much code is executed, not how well tests detect real bugs.

- Mutation testing reveals the truth. By injecting small code changes (“mutants”) and checking whether tests catch them, we can measure effectiveness, not just presence.

- Testing every method matters. In our benchmark, both mutation score and coverage rose sharply as more methods were tested, and reaching 100% coverage coincided with a 100% mutation score.

- EQS (Early Quality Score) combines three key metrics:

  - Code coverage

  - Mutation score

  - Method-scope coverage – measures how many public methods have dedicated tests (unit, component, or API-level) written or generated specifically to validate that method’s behavior and covering 100% of the method’s code.

- Why it matters: EQS provides a framework for evaluating AI-generated tests and autonomous test agents, distinguishing between tests that merely exist and tests that truly safeguard code.

It would be ideal if software were developed without bugs. In reality, bugs are introduced every time new code is written, and this is getting 100× worse in the era of AI-generated code, at least for the foreseeable future.

We write tests to protect our code from regressions. But our goal isn’t just to write good tests; it’s to build high-quality software.

So how can we tell if our tests truly prevent bugs and cover all scenarios, including edge cases?

Traditionally, we’ve answered this with one metric: code coverage.

In this post, we introduce a new way to measure a method’s test quality, EQS (Early Quality Score), which combines coverage, mutation score, and a new criterion: method-scope coverage.

Code coverage measures the percentage of code executed during tests.

If coverage is zero, there are no meaningful tests. But even with high coverage, say 80%, the quality of tests might still be low if they don’t cover edge cases or detect real faults.
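To make the gap concrete, here is a minimal TypeScript sketch built around a hypothetical `applyDiscount` function. It is hand-instrumented with numbered probes, a simplified stand-in for the instrumentation that coverage tools insert automatically (not how any particular tool works internally). A test that executes both branches but checks nothing still reaches 100% coverage:

```typescript
// Hypothetical hand-instrumented function: each numbered probe marks a code path,
// mimicking what coverage tools insert automatically.
const TOTAL_PROBES = 3;
const hits = new Set<number>();

function applyDiscount(total: number, isMember: boolean): number {
  hits.add(1); // function entry
  if (isMember && total > 100) {
    hits.add(2); // discount branch
    return total - 10;
  }
  hits.add(3); // no-discount branch
  return total;
}

// Coverage = fraction of probes executed at least once.
function coverage(): number {
  return hits.size / TOTAL_PROBES;
}

// A "test" that runs both branches but never checks a result.
function testWithoutAssertions(): void {
  applyDiscount(150, true);
  applyDiscount(50, false);
}

hits.clear();
testWithoutAssertions();
console.log(`coverage: ${coverage() * 100}%`); // prints "coverage: 100%", yet nothing was verified
```

If `applyDiscount` returned a wrong amount, this test would still pass with full coverage.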

Another common gap: tests are typically written for public methods but don’t always reach private ones, where much of the logic resides. As a result, high coverage on paper doesn’t always mean the code is well-tested.

Mutation testing introduces small changes (mutations) into the code, such as flipping logical operators or altering constants, to see whether existing tests detect them.

If a test fails due to a mutation, the mutant is killed, meaning the test suite caught the bug. If it doesn’t fail, the mutant survives, showing a potential gap in test quality.
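The kill/survive logic can be sketched by hand. In this illustration, `original`, `mutant`, and the two suites are hypothetical; a tool such as Stryker generates and runs mutants automatically rather than requiring them to be written out. The mutant flips `&&` to `||`, one of the logical-operator changes mentioned above:

```typescript
type Discount = (total: number, isMember: boolean) => number;

// Original implementation (hypothetical).
const original: Discount = (total, isMember) =>
  isMember && total > 100 ? total - 10 : total;

// Mutant: the logical operator && is flipped to ||, as a mutation tool might do.
const mutant: Discount = (total, isMember) =>
  isMember || total > 100 ? total - 10 : total;

// A test suite is modeled as a function that returns true when all its checks pass.
type Suite = (fn: Discount) => boolean;

// Weak suite: only checks a member with a large order.
const weakSuite: Suite = (fn) => fn(150, true) === 140;

// Stronger suite: also checks a non-member with a large order.
const strongSuite: Suite = (fn) =>
  fn(150, true) === 140 && fn(150, false) === 150;

// A mutant is killed when a suite that passes on the original fails on the mutant.
function isKilled(suite: Suite, mutated: Discount): boolean {
  return suite(original) && !suite(mutated);
}

console.log(isKilled(weakSuite, mutant));   // false: the mutant survives
console.log(isKilled(strongSuite, mutant)); // true: the mutant is killed
```

Both suites pass on the original code, but only the stronger one exercises an input where the mutated condition changes the result, so only it kills the mutant.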

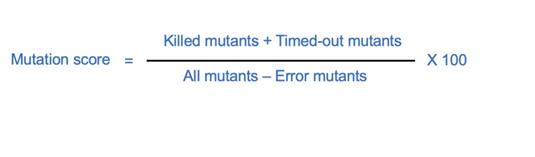

The mutation score quantifies this:

$$\text{Mutation score} = \frac{\text{killed mutants}}{\text{total mutants}} \times 100\%$$

A high mutation score means your tests don’t just run the code; they validate it.
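As a sketch of how the score can be computed from individual mutant outcomes, the function below assumes the convention Stryker’s documentation describes, as we understand it: killed and timed-out mutants count as detected, survived and uncovered mutants count as undetected, and mutants that failed to compile or crashed at runtime are excluded. The status names mirror Stryker’s report, but the `MutantStatus` type and `mutationScore` function are our own illustration, not Stryker’s API:

```typescript
// Mutant outcomes, loosely modeled on the statuses Stryker reports (assumption).
type MutantStatus =
  | "Killed"
  | "Survived"
  | "NoCoverage"
  | "Timeout"
  | "CompileError"
  | "RuntimeError";

// Mutation score = detected / valid. Timeouts count as detected;
// compile and runtime errors are left out of the denominator.
function mutationScore(statuses: MutantStatus[]): number {
  const detected = statuses.filter((s) => s === "Killed" || s === "Timeout").length;
  const undetected = statuses.filter((s) => s === "Survived" || s === "NoCoverage").length;
  const valid = detected + undetected;
  return valid === 0 ? 0 : detected / valid;
}

const results: MutantStatus[] = [
  "Killed", "Killed", "Timeout", "Survived", "NoCoverage", "CompileError",
];
console.log(mutationScore(results)); // 0.6 (3 detected out of 5 valid mutants)
```

Returning 0 when there are no valid mutants is a choice made for this sketch; a real report may treat that case differently.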

We used Stryker, a mutation testing framework, to measure mutation score in a real-world benchmark.

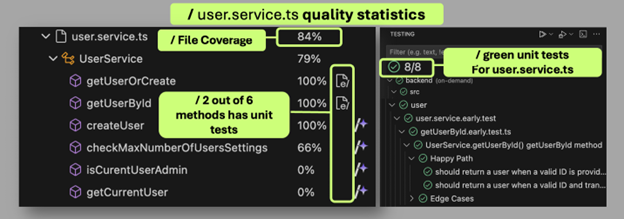

In our backend repository, the file user.service.ts handles various user operations.

Initially, only 2 of the 6 methods in the UserService class had tests: 8 passing unit tests in total.

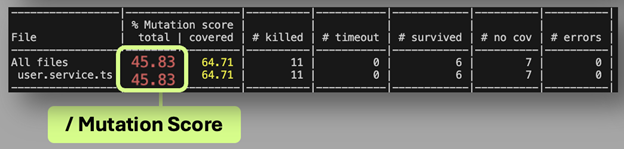

Coverage looked decent at 84%, but the mutation score was just 46%. That means more than half of the possible code changes (mutants) went unnoticed by the existing tests: a clear signal of test quality issues despite strong coverage numbers.

To improve test quality, we added tests for all six methods.

The results speak for themselves:

As more methods were tested, both coverage and mutation score increased dramatically. The takeaway: testing every method individually, not just at the component or integration level, directly improves test quality.

After analyzing hundreds of such cases, we realized test quality can’t be measured by a single metric.

So we created EQS (Early Quality Score), a composite measure that evaluates tests across three dimensions:

- Code coverage – % of code executed by tests

- Mutation score – % of mutants caught by tests

- Method-scope coverage – % of public methods that have direct tests (unit, component, or endpoint) achieving 100% coverage for that method.

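$$\text{EQS} = \text{Coverage} \times \text{Mutation Score} \times \text{Method-Scope Coverage}$$

where each term is expressed as a fraction between 0 and 1 (so 84% becomes 0.84).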

This means if any of the three is zero, EQS drops to zero, emphasizing that true test quality depends on all dimensions working together.
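A minimal sketch of the EQS calculation, assuming each input is expressed as a fraction between 0 and 1. The `QualityMetrics` type and `eqs` function are hypothetical names for illustration:

```typescript
// Hypothetical container for the three EQS inputs, each a fraction in [0, 1].
type QualityMetrics = {
  coverage: number;
  mutationScore: number;
  methodScopeCoverage: number;
};

// EQS is the product of the three fractions, so any zero input yields zero.
function eqs(m: QualityMetrics): number {
  const values = [m.coverage, m.mutationScore, m.methodScopeCoverage];
  // Reject out-of-range inputs (the negated check also rejects NaN).
  if (values.some((v) => !(v >= 0 && v <= 1))) {
    throw new RangeError("each metric must be a fraction between 0 and 1");
  }
  return values.reduce((product, v) => product * v, 1);
}

console.log(eqs({ coverage: 1, mutationScore: 1, methodScopeCoverage: 1 }));     // 1
console.log(eqs({ coverage: 0.5, mutationScore: 0.5, methodScopeCoverage: 1 }));  // 0.25
console.log(eqs({ coverage: 0.9, mutationScore: 0.8, methodScopeCoverage: 0 }));  // 0
```

An arithmetic average of the same inputs would let strong coverage mask a zero mutation score; the product does not, which is the behavior described above.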

At the project level, method-scope coverage measures how many public methods have dedicated tests (unit, component, or endpoint) that fully cover their logic.

If you have 100 public methods but only 10 of them are directly tested at 100% coverage, your method-scope coverage is 10%.
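The definition above translates into a small TypeScript sketch. The `MethodReport` shape is an assumption for illustration; in practice the per-method figures would have to come from a coverage report restricted to each method’s dedicated tests. Private methods are excluded from the denominator, following the definition:

```typescript
// Hypothetical per-method record: how much of the method's code its
// dedicated tests (unit, component, or endpoint) cover, as a fraction in [0, 1].
type MethodReport = {
  name: string;
  isPublic: boolean;
  directCoverage: number; // 0 when the method has no dedicated tests
};

// Fraction of public methods whose dedicated tests cover 100% of their code.
function methodScopeCoverage(methods: MethodReport[]): number {
  const publicMethods = methods.filter((m) => m.isPublic);
  if (publicMethods.length === 0) return 0; // nothing to measure
  const fullyCovered = publicMethods.filter((m) => m.directCoverage === 1).length;
  return fullyCovered / publicMethods.length;
}

// 100 public methods, only 10 of them directly tested at 100% coverage.
const methods: MethodReport[] = [];
for (let i = 0; i < 100; i++) {
  methods.push({
    name: `method${i}`,
    isPublic: true,
    directCoverage: i < 10 ? 1 : 0.6, // the rest are only partially covered
  });
}
console.log(methodScopeCoverage(methods)); // 0.1
```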

Below is the data from our user.service.ts benchmark:

Note: once tests existed for a method, their combined coverage of that method reached 100%, whether they were unit, component, or endpoint tests; hence each such method was included in the method-scope coverage for this file.

As you can see, as method-scope coverage increased, the mutation score improved and EQS rose consistently, from 0% to 100%.
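The note above says that a method’s unit, component, and endpoint tests together reached 100% coverage of that method. One reasonable reading of how per-level coverage combines is a union of executed lines, so a line hit by both a unit test and an endpoint test is counted once. The sketch below assumes that reading; `combinedMethodCoverage` and its inputs are hypothetical:

```typescript
type TestLevel = "unit" | "component" | "endpoint";
const LEVELS: TestLevel[] = ["unit", "component", "endpoint"];

// Line numbers of one method executed by tests at each level (hypothetical data).
type LevelHits = Partial<Record<TestLevel, number[]>>;

// Combined coverage of one method: the union of lines hit at every level,
// divided by the method's line count. Overlapping lines are counted once.
function combinedMethodCoverage(methodLines: number[], hits: LevelHits): number {
  if (methodLines.length === 0) return 1; // treat an empty method as trivially covered
  const covered = new Set<number>();
  for (const level of LEVELS) {
    for (const line of hits[level] ?? []) {
      covered.add(line);
    }
  }
  const hitCount = methodLines.filter((line) => covered.has(line)).length;
  return hitCount / methodLines.length;
}

// A hypothetical method spanning lines 10-15.
const lines = [10, 11, 12, 13, 14, 15];
console.log(combinedMethodCoverage(lines, { unit: [10, 11, 12] }));                               // 0.5
console.log(combinedMethodCoverage(lines, { unit: [10, 11, 12], endpoint: [10, 13, 14, 15] }));   // 1
```

Neither level alone covers the whole method here, but together they do, so under this reading the method would count toward method-scope coverage.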

EQS provides a unified way to evaluate test quality.

It captures how much code is tested (coverage), how effective the tests are (mutation), and how broadly the testing spans the codebase (method-scope).

For teams using AI or autonomous agents to generate tests, EQS becomes an essential metric: it distinguishes between tests that exist and tests that actually work.

We believe EQS can help redefine how organizations measure quality.

As testing shifts from manual creation to autonomous generation, EQS offers a framework to measure and trust the quality of AI-generated tests across the organization, up to the engineering leadership level.

In time, software won’t just be tested; it will be self-validated, continuously and autonomously, before it ever reaches production.

EQS = The new standard for measuring real test quality.