
At enterprise scale, AI-generated code introduces a structural imbalance. Development velocity increases faster than trust, testing, and long-term maintainability can scale alongside it.

This imbalance, rather than code correctness, is what ultimately slows enterprise adoption.

Many enterprise teams are already experimenting with AI-generated code.

An MIT report found that 95% of generative AI pilots at companies are failing. Why is that?

In early stages, the results are impressive. Developers move faster, experiments take minutes instead of days, and the cost of trying new ideas drops significantly.

The challenge emerges when AI-generated code moves from experimentation into production systems. At that point, the question is no longer whether the code works in isolation. Most of the time, it does. The real concern is whether teams can confidently understand, validate, and evolve that code over time.

This is where adoption begins to stall. Not because enterprises resist AI, but because speed without verification introduces risks that production environments cannot tolerate.

This use case examines where AI-generated code fits within enterprise software development, where it begins to break down, and why testing, coverage, and long-term maintainability become the true limiting factors.

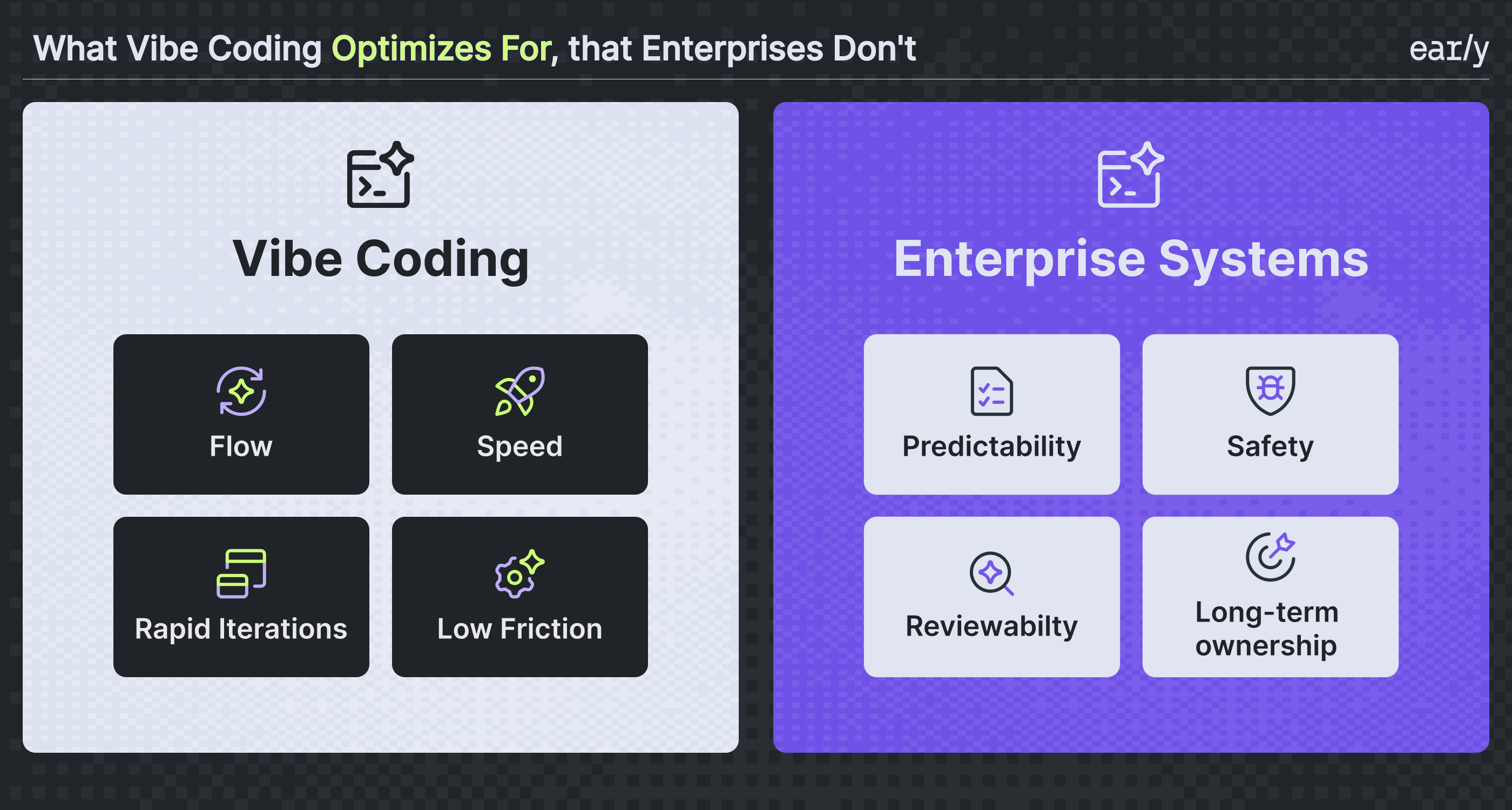

In practice, vibe coding optimizes for momentum: staying in flow and generating a lot of code quickly.

Enterprise software optimizes for safety. Changes need to be understandable, reviewable, and safe to operate over time, often by people who didn’t write the original code.

Production systems carry a lot of invisible context that isn’t present when code is generated. Even when the output looks clean, it doesn’t always fit the system it’s being added to.

That mismatch is where enterprise adoption starts to break down.

In enterprise environments, trust is not a secondary concern. It is the gate that determines whether AI-generated code ever reaches production.

The trust issue with AI-generated code isn’t about the majority of code that looks good. Most of the time, that code is genuinely impressive.

The problem is the hidden 5%: the edge case, the unstated assumption, or the subtle behavior change that can break things in production. In enterprise systems, that level of risk is unacceptable.
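To make the "subtle behavior change" concrete, here is a minimal, hypothetical sketch in Python. The names `parse_quantity_original` and `parse_quantity_generated` are invented for illustration, and the second function simply stands in for the kind of plausible-looking cleanup an assistant might suggest. Both versions agree on ordinary input, so a quick review or a happy-path test sees no difference; only malformed input reveals that the contract has changed.

```python
def parse_quantity_original(raw: str) -> int:
    # Original contract: malformed input raises ValueError, so bad data fails loudly.
    return int(raw)


def parse_quantity_generated(raw: str) -> int:
    # Hypothetical AI-suggested "robust" rewrite. It reads like a harmless cleanup...
    try:
        return int(raw.strip())
    except ValueError:
        # ...but malformed input now silently becomes 0 instead of raising.
        return 0


# On ordinary input the two versions agree, so a happy-path check sees no difference.
print(parse_quantity_original("12"), parse_quantity_generated("12"))

# On malformed input the behavior has changed: the rewrite returns 0 instead of failing.
print(parse_quantity_generated("12x"))
```

Neither function is obviously wrong in isolation. The risk is that the change in contract stays invisible unless a reviewer reads for it or a test pins the original behavior down.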

Once teams know that risk exists, it changes how they treat all AI-generated code. Even the good parts get reviewed more aggressively. Changes slow down. Trust erodes, not just in a single pull request, but across the codebase.

And the problem doesn’t stop at shipping. That hidden complexity makes the code harder to maintain, harder to change, and harder for the next team to reason about.

For many enterprises, that’s enough to pull back entirely, or to limit AI-generated code to experiments and greenfield projects, where technical debt quietly accumulates and becomes a problem deferred to the future.

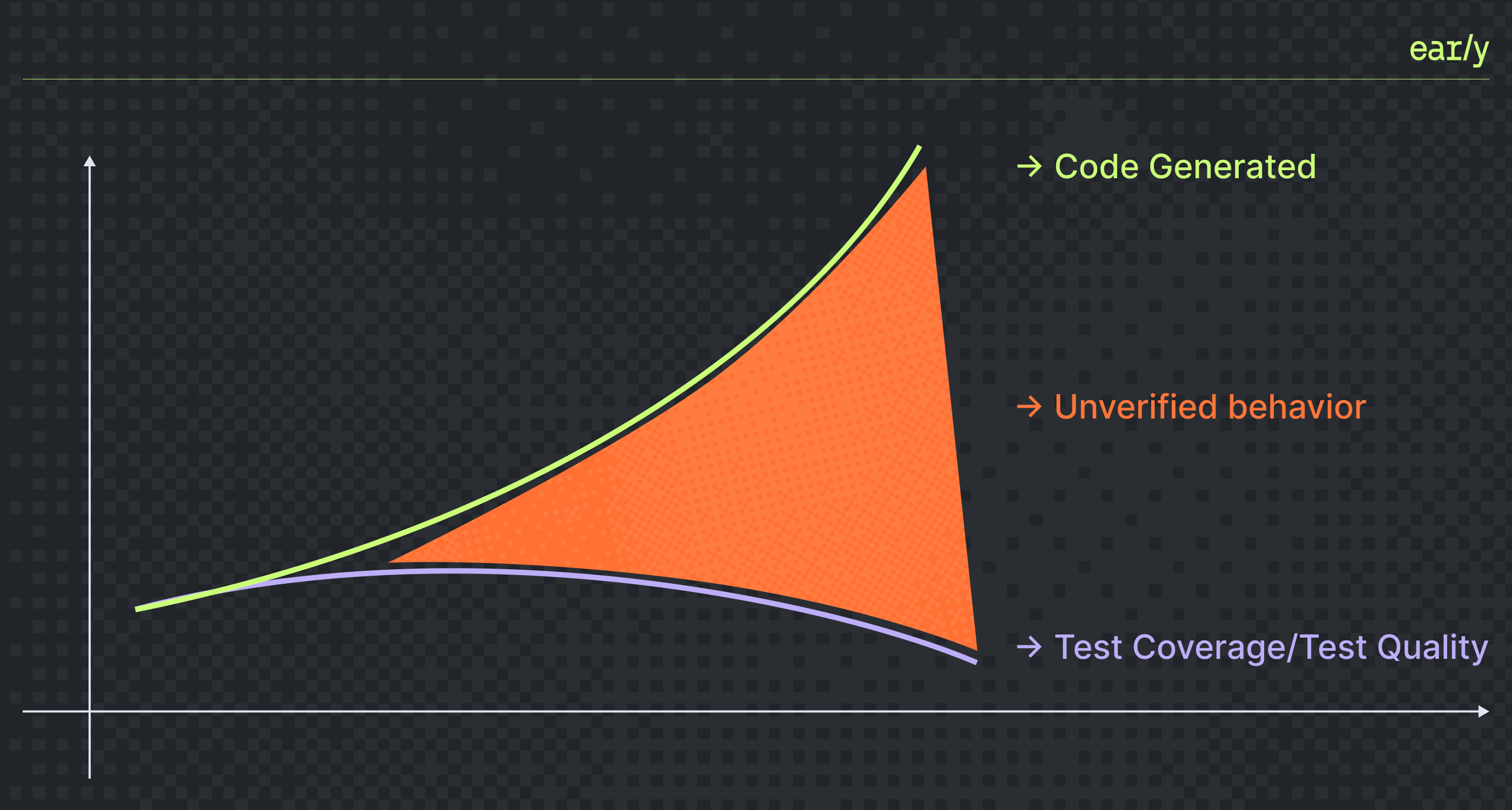

As teams adopt AI-generated code more broadly, code generation scales very quickly. Testing doesn’t.

Testing falls behind because AI-generated code increases behavioral complexity faster than most test strategies can adapt.

As new logic is introduced at scale, existing tests validate historical behavior while generated tests often lack the context needed to meaningfully assert correctness.

As more code is generated, tests are expected to cover more branches, more combinations, and more assumptions, yet test creation does not keep pace: in most teams, tests are still written manually and often reactively.
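As a rough illustration of why the testing burden grows faster than the code: if a function branches on n independent on/off options, covering every combination of them takes 2^n input cases. The sketch below uses hypothetical option names; real code rarely needs the full product, but the growth pattern is the point.

```python
from itertools import product


def input_combinations(flags: list[str]) -> list[dict[str, bool]]:
    """Return every on/off combination of the given boolean options."""
    return [
        dict(zip(flags, values))
        for values in product([False, True], repeat=len(flags))
    ]


# Hypothetical options a checkout function branches on before and after generated changes.
original = ["express", "gift_wrap"]
after_generation = original + ["coupon", "loyalty_points", "split_shipment"]

print(len(input_combinations(original)))          # 2 options -> 4 combinations
print(len(input_combinations(after_generation)))  # 5 options -> 32 combinations
```

Adding three options to the code is a few lines of change; the space of behaviors a test suite would need to reason about grows eightfold.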

What I see in practice is that test suites slowly fall behind. Coverage numbers don’t necessarily drop, but confidence does. Tests still pass, yet they stop exercising the most important parts of the new logic.

- Generated tests often don’t hold up, especially when they are produced without sufficient context or validation. As code becomes more complex, so do the tests required to validate it. Many generated tests fail to compile, rely on brittle assumptions, or are hard to fix. Under time pressure, engineers discard them, leaving the most complex and risky logic untested.

- Existing tests can’t keep up. Most enterprise test suites are manually written and already lag behind development. When new code is generated faster than ever, these tests continue to validate limited or historical behavior, offering little protection for newly introduced logic.
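A minimal, hypothetical Python sketch of the second point: `shipping_cost` and `test_standard_rate` are invented names. The test was written against the original flat-rate behavior, and it keeps passing after two new branches are added, even though it never executes either of them.

```python
def shipping_cost(weight_kg: float, express: bool = False) -> float:
    """Hypothetical pricing function after an AI-assisted change."""
    cost = 5.0 * weight_kg      # original behavior: flat rate per kg
    if express:                 # new branch: express surcharge
        cost += 15.0
    if weight_kg >= 20:         # new branch: free-shipping threshold...
        cost = 0.0              # ...which also silently drops the express surcharge
    return cost


def test_standard_rate():
    """Pre-existing test, written against the original behavior only."""
    assert shipping_cost(2.0) == 10.0


test_standard_rate()  # still green, yet neither new branch has run
```

Whether a heavy express order should really ship for free is exactly the kind of question the existing suite cannot answer, because it was never asked.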

The result is a growing gap between how fast code changes and how well it’s actually protected.

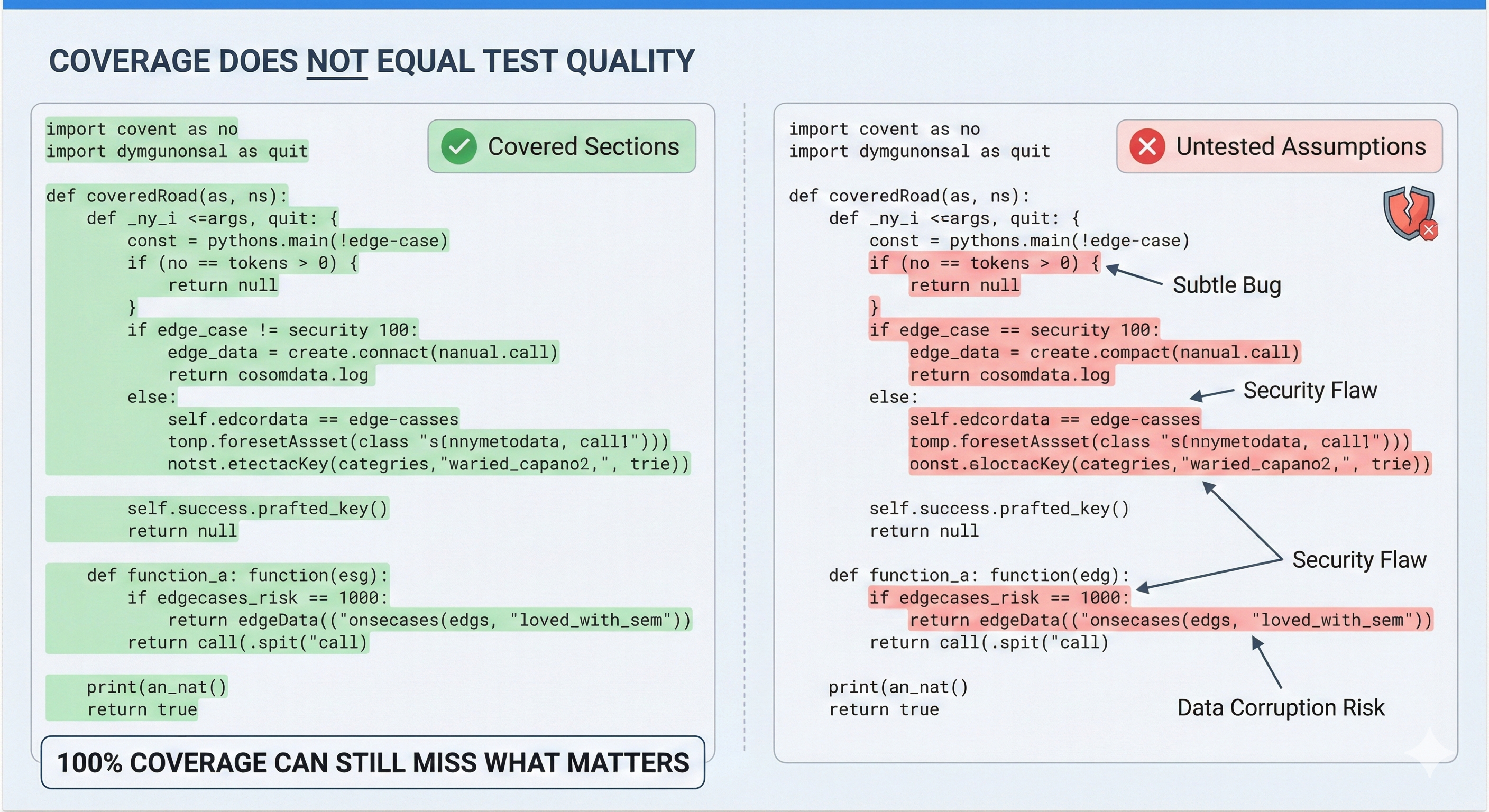

When adoption reaches production systems, teams often lean on coverage metrics for reassurance.

Coverage tells you what ran, not how well it was tested, which is why coverage alone is a misleading quality signal. High coverage does not mean high-quality tests, and it doesn’t mean new logic is actually validated.

With AI-generated code, large amounts of new behavior can be added while coverage barely moves. Tests execute lines of code, but miss assumptions, edge cases, and intent.

So builds stay green and coverage looks healthy, while risk quietly accumulates underneath.

For enterprises, the impact of AI-generated code doesn’t stop at the moment it’s merged.

Over time, that hidden complexity makes systems harder to understand and harder to change. New teams inherit behavior they didn’t design, logic they don’t fully trust, and tests that don’t clearly explain intent, which leaves them little way to judge test quality beyond basic coverage.

Small changes start to feel risky. Engineers hesitate, not because they don’t know how to write the code, but because they’re unsure what else it might affect.

This is where the real cost shows up: not in the first release, but in every change that comes after.

Vibe coding unlocked a new level of speed and creativity, and that matters. It changed what’s possible for individual developers and small teams.

But enterprises don’t operate on speed alone. They operate on trust, predictability, and the ability to change systems safely over time.

What’s missing for enterprise adoption isn’t better prompts or faster generation. It’s a way to make confidence scale alongside code. Without that, organizations will continue to slow AI adoption, not because they don’t believe in the technology, but because production systems demand more than momentum.

The next phase of AI-assisted development won’t be about writing more code.

It will be about building systems that teams can understand, trust, and evolve.

Vibe coding showed us what speed looks like.

Now the challenge is making it durable.

Q1: Why does AI-generated code slow down at enterprise scale instead of speeding teams up?

AI-generated code increases development speed initially, but at scale it introduces uncertainty around behavior, edge cases, and long-term impact. When teams cannot confidently validate changes, review effort increases and velocity drops.

Q2: Is the main risk of AI-generated code correctness or trust?

Trust is the primary risk. Most AI-generated code appears correct, but hidden assumptions and edge cases make teams less confident in deploying and maintaining it over time.

Q3: Why do existing test suites fail to protect AI-generated code?

Most enterprise test suites are designed to validate known behavior. AI-generated code introduces new logic faster than these tests can evolve, leaving critical paths unvalidated even when tests pass.

Q4: Does high test coverage reduce the risk of AI-generated code?

Not necessarily. Coverage shows which code paths ran, not whether important behaviors, assumptions, or failure modes were meaningfully tested.

Q5: Can enterprises safely adopt AI-generated code in production systems?

Yes, but only if validation, testing, and maintainability scale alongside code generation. Without that balance, risk accumulates faster than teams can manage.