
That trust is getting harder to build. Industry analysts predict that by 2027, 80% of engineering teams will use AI-powered coding tools. These tools accelerate development but amplify risk because more code gets written, reviewed, and merged faster than traditional QA can keep up. Each new commit increases the surface area for bugs, regressions, and integration failures that manual testing simply can’t catch in time.

An automated testing strategy is the essential framework that ensures quality and speed coexist. Here’s what that strategy looks like when it works and what happens when you don’t have one.

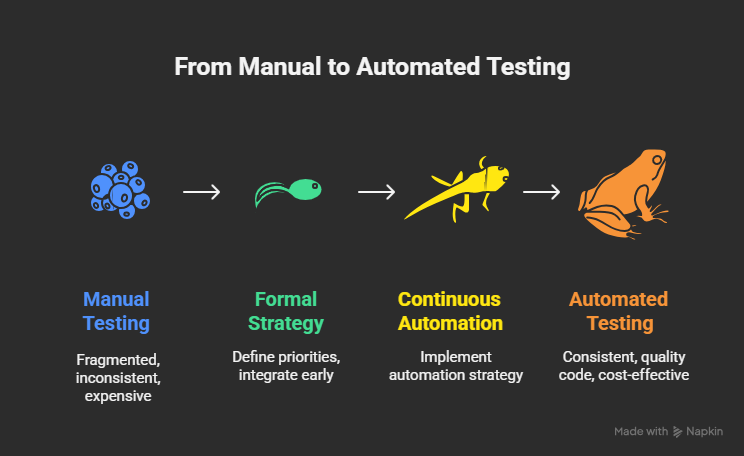

Manual testing always starts small and ends expensively. The costs add up quickly when testing is fragmented or inconsistent due to manual efforts. Teams constantly battle critical regressions in production, slowing release cycles and forcing developers to leave feature work to firefight issues. Organizations that implement a continuous automation strategy report savings of up to 50%.

A formal strategy, not an ad-hoc effort, is what separates teams that consistently ship quality code from those consistently stuck in a reactive cycle.

Having a testing strategy doesn’t mean testing everything. It means testing what actually matters. The process involves defining what to test, why it’s worth testing, and how those checks support the release process.

The four core components of an effective automated testing strategy include:

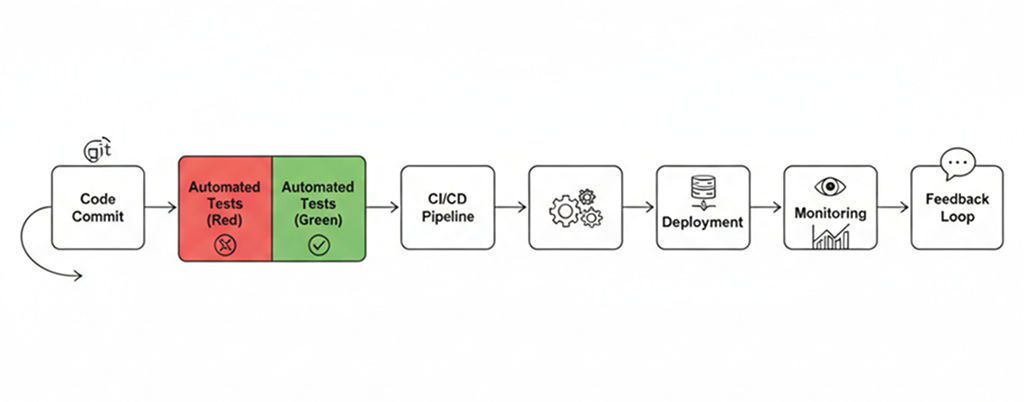

- CI/CD Integration: The strategy must embed testing as a critical part of the pipeline. This ensures tighter feedback loops, turning the CI/CD workflow into an active quality gate.

- Prioritized & Meaningful Coverage: The focus is on consistent test coverage for business logic and other high-risk functionalities rather than chasing vanity metrics. Engineering leads can decide which tests must run before merge, for example, enforcing red tests on critical payment flows while green tests protect stable modules post-deployment.

- Fast, Repeatable Validation: The test suite must be quick and deterministic. If tests are slow and fail unpredictably, developers will stop trusting them and find ways to bypass them.

- Reliability: The strategy must prioritize tests that catch real, high-impact bugs, not vanity coverage, with results that increase the team’s trust.

Instead of assigning test creation to individual developers’ discretion, engineering leaders define when and where automated tests are applied to align with business goals and risk tolerance.

When testing automation is built into the workflow, releases move faster, rollbacks drop, and everyone from the devs to leadership sleeps better at night. Here are the three key benefits of automated testing.

1. Accelerated Release Cycles

Automated feedback loops cut the validation process from days to minutes and deliver immediate feedback directly within the CI/CD pipeline.

Faster automated validation reduces the lengthy back-and-forth in code reviews, allowing developers to merge and deploy faster. This performance pattern reflects findings from DORA research, which consistently shows that elite-performing teams combine high deployment frequency with low change-failure rates. Robust automation is at the heart of this feat.

2. Improved Code Quality and Coverage

A real testing strategy starts with knowing which parts of the codebase you can’t afford to break and building guardrails around them first. Those guardrails combine pre-commit checks, CI enforcement, regression tests, and live monitoring, a chain that quickly stops bad code and surfaces real issues.

A balanced suite mixes red tests that catch new issues before they ship and green tests that guard against regressions. Over time, that suite becomes living documentation, a record of how the system is meant to behave, and a safety net that grows with the codebase, much like LLM training cycles that refine accuracy through iteration.

3. Reduced Production Failures and Hotfix Stress

A reliable test suite is the only thing between a calm release and a 2 AM rollback. It catches bugs before users do and stops bad commits from ever reaching production, which breaks the panic cycle. Incidents shrink from emergencies to line items on tomorrow’s to-do list.

That reliability changes how people write code. Refactoring a shared billing module or untangling a thousand-line controller no longer feels like walking on glass. The suite shouts when something breaks, so developers stop guessing. Confidence builds, cleanup accelerates, and fragile patches finally disappear. What used to be “too risky to touch” becomes part of everyday work again.

Trying to “do test automation” without a clear strategy often creates more chaos than value. Teams start with good intentions, but those tests quickly lose relevance without structure, purpose, or prioritization.

Most teams fall into the same traps. They measure coverage instead of confidence, test everything except the risky parts, and let brittle tests erode trust in the suite. Over time, automation becomes another layer of tech debt that slows everyone down.

Where teams commonly fail:

- Chasing vanity coverage by padding the suite with low-value tests while core flows like auth and payments stay exposed.

- Writing brittle assertions that collapse after harmless refactors because mocks and hard-coded data can’t keep up.

- Letting ownership blur between dev and QA until no one maintains the suite, a gap often filled by managed security service providers in large organizations.

- Leaning too hard on UI tests that flake for reasons unrelated to bugs.

- Falling back to manual QA when automation loses trust, turning every release into another round of firefighting.

Without a coherent strategy, developers waste time fixing low-impact tests while high-revenue features remain unprotected, leaving room for cybersecurity concerns that often go unnoticed in fragmented testing setups. A clear strategy reverses that. It defines priorities, ownership, and meaningful coverage. More importantly, it makes the suite something the entire team can trust again.

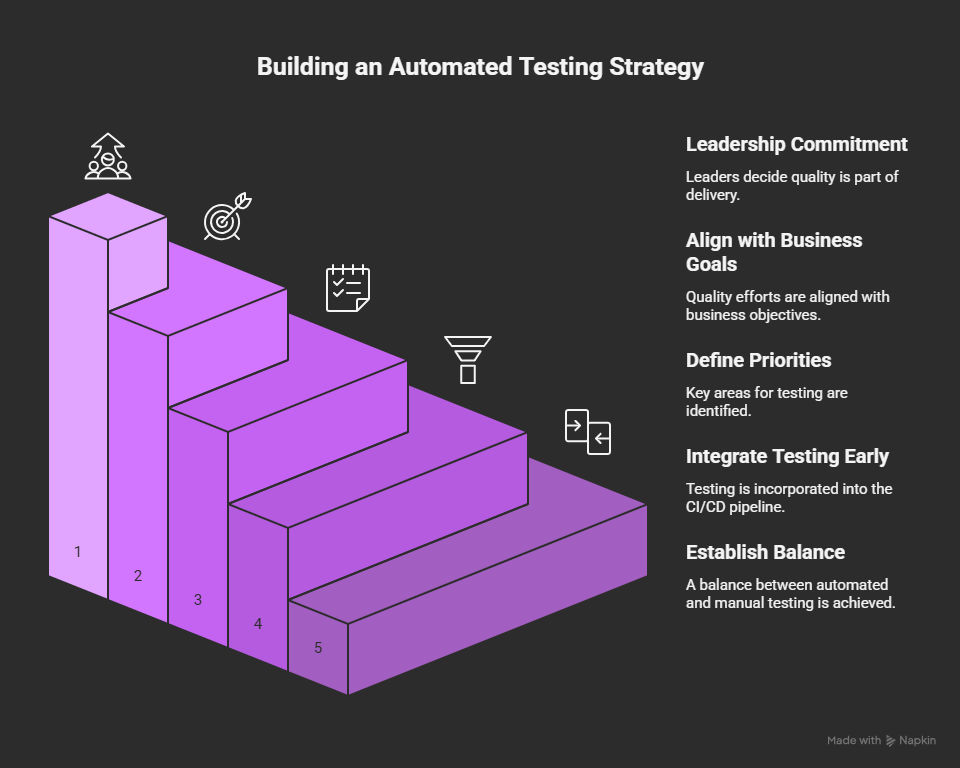

A solid automated testing strategy begins with leadership deciding that quality is part of delivery. It involves a series of top-down, practical decisions to align quality efforts with business goals. Here are the five practical steps leaders can follow.

Step 1: Define Priorities

The first step is deciding what actually needs protection. A strong strategy begins with a risk-based view of your system rather than a “test everything” checklist. Focus first on what breaks revenue, user trust, or core functionality, then expand outward.

How to do it:

- Start with the flows that make or break the product, such as logins, payments, and core API endpoints.

- Weigh impact against how often things change; risk lives where code moves fastest.

- Stack priorities: high, medium, low.

- Write down why they matter.

- Recheck every quarter; systems drift fast.
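One way to make this ranking concrete is a small scoring script. The sketch below is only an illustration: the `Flow` fields, the 1-to-5 scales, the thresholds of 15 and 6, and the example flows are all assumptions to tune for your own system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    name: str
    impact: int       # assumed scale: 1 (minor annoyance) to 5 (breaks revenue or trust)
    change_rate: int  # assumed scale: 1 (rarely touched) to 5 (changes every sprint)
    reason: str       # why this flow matters, written down on purpose


def tier(flow: Flow) -> str:
    """Map an impact x change-rate score onto a high/medium/low tier."""
    score = flow.impact * flow.change_rate
    if score >= 15:  # hypothetical threshold
        return "high"
    if score >= 6:   # hypothetical threshold
        return "medium"
    return "low"


def prioritize(flows: list[Flow]) -> list[tuple[str, str]]:
    """Return (flow name, tier) pairs, riskiest first."""
    ranked = sorted(flows, key=lambda f: f.impact * f.change_rate, reverse=True)
    return [(f.name, tier(f)) for f in ranked]


# Made-up example data; replace with your own flows each quarter.
flows = [
    Flow("login", impact=5, change_rate=3, reason="blocks every user"),
    Flow("profile avatar upload", impact=1, change_rate=2, reason="cosmetic"),
    Flow("checkout payment", impact=5, change_rate=4, reason="direct revenue"),
    Flow("search filters", impact=3, change_rate=3, reason="affects discovery"),
]

for name, level in prioritize(flows):
    print(f"{level:<6} {name}")
```

Keeping the `reason` next to the score means the quarterly review can question the rationale as well as the number.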

Step 2: Integrate Testing Early in CI/CD

The goal is to make every commit trigger meaningful checks before it reaches staging. When testing is baked into CI/CD, feedback comes fast, breakages surface early, and releases stop relying on “hope and rollback.”

How to do it:

- Run unit and smoke tests on every pull request; a failed test blocks the merge.

- Add pre-merge gates so nothing hits main without passing critical paths.

- Keep feedback under ten minutes by parallelizing and caching.

- Shift integration tests left and mock smartly.

- Automate rollback checks; confirm stability before traffic moves.
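Parallelizing usually means splitting the suite across several CI workers. As a rough sketch of one way to plan that split, the function below assigns the slowest test files first to whichever shard is currently least loaded. The file names, timings, and the 600-second budget are illustrative assumptions, and real CI systems often provide their own splitting feature.

```python
import heapq


def shard_tests(durations: dict[str, float], shards: int) -> list[list[str]]:
    """Greedily assign the slowest tests first to the least-loaded shard."""
    if shards < 1:
        raise ValueError("shards must be at least 1")
    # Heap entries are (total seconds assigned so far, shard index).
    heap = [(0.0, index) for index in range(shards)]
    heapq.heapify(heap)
    plan: list[list[str]] = [[] for _ in range(shards)]
    slowest_first = sorted(durations.items(), key=lambda kv: kv[1], reverse=True)
    for test, seconds in slowest_first:
        load, index = heapq.heappop(heap)
        plan[index].append(test)
        heapq.heappush(heap, (load + seconds, index))
    return plan


def slowest_shard(durations: dict[str, float], plan: list[list[str]]) -> float:
    """Wall-clock time of the run is bounded by the heaviest shard."""
    return max(sum(durations[test] for test in shard) for shard in plan)


# Hypothetical timings in seconds, e.g. taken from a previous CI run.
durations = {
    "test_payments.py": 240,
    "test_auth.py": 180,
    "test_api.py": 150,
    "test_reports.py": 90,
    "test_utils.py": 30,
}

plan = shard_tests(durations, shards=2)
budget_seconds = 600  # the ten-minute feedback target
print(plan, slowest_shard(durations, plan) <= budget_seconds)
```

Run serially, these files would take 690 seconds and miss the target; split across two shards, the heaviest shard fits within it.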

Step 3: Use Automation to Fill Gaps

Developers skip tests not out of neglect, but because deadlines and complexity make tests the first thing to cut. A good testing strategy plans for this reality instead of pretending it doesn’t exist. Automation should fill the blind spots humans leave behind, especially in areas where manual testing is slow, repetitive, or easy to forget.

How to do it:

- Automate regression checks around fragile or legacy code to catch silent breakage.

- Generate coverage reports that flag risky, high-change files instead of vanity metrics.

- Integrate static analysis to surface unsafe patterns early.

- Use AI or scripts to scaffold baseline tests fast.

- Schedule nightly smoke runs for critical user paths.
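A report that flags risky, high-change files can be as simple as joining two data sets: per-file coverage and per-file commit counts. The sketch below assumes both have already been exported into dictionaries (for example from a coverage tool and from version-control history); the paths, numbers, and thresholds are made up for illustration.

```python
def risky_files(
    coverage: dict[str, float],
    churn: dict[str, int],
    min_coverage: float = 0.8,  # hypothetical policy threshold
    min_commits: int = 5,       # hypothetical "high-change" cutoff
) -> list[tuple[str, int, float]]:
    """Return (path, commits, coverage) for files that change often but are poorly covered."""
    flagged = [
        (path, commits, coverage.get(path, 0.0))  # no coverage data counts as 0%
        for path, commits in churn.items()
        if commits >= min_commits and coverage.get(path, 0.0) < min_coverage
    ]
    # Most-changed files first; ties broken by lowest coverage.
    return sorted(flagged, key=lambda row: (-row[1], row[2]))


# Made-up inputs: fraction of lines covered, and commits in the last quarter.
coverage = {
    "billing/invoice.py": 0.35,
    "auth/session.py": 0.92,
    "utils/strings.py": 0.40,
}
churn = {
    "billing/invoice.py": 14,
    "auth/session.py": 11,
    "utils/strings.py": 1,
    "legacy/export.py": 7,
}

for path, commits, covered in risky_files(coverage, churn):
    print(f"{path}: {commits} commits, {covered:.0%} covered")
```

Note that `utils/strings.py` has low coverage but is not flagged because it rarely changes, which is the difference between this kind of report and a raw coverage percentage.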

Step 4: Establish a Balance

A healthy test suite is built on balance. Too many red tests bury development in constant noise. Too few, and new bugs slip through. A mature testing strategy finds the right mix between red tests, which hunt for new bugs, and green tests, which protect what already works.

How to do it:

- Define what’s red and what’s green. Red hunts for new bugs in unstable code; green protects what works.

- Run reds off-hours or nightly, keep greens on every pull request.

- Treat red failures as clues, not disasters.

- Watch the ratio. Too many reds mean noise; too few mean blind spots.

- Label test tiers so everyone knows which failures demand action and which just need a look.
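The tier labels can be encoded so that tooling, not memory, decides which failures block a merge. This is a minimal sketch under assumed names (`Tier`, `Outcome`, and the test names are hypothetical); most test runners offer markers or tags that could carry the same labels.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    GREEN = "green"  # protects behaviour that already works; runs on every pull request
    RED = "red"      # hunts for new bugs in unstable code; runs nightly


@dataclass(frozen=True)
class Outcome:
    name: str
    tier: Tier
    passed: bool


def blocks_merge(outcomes: list[Outcome]) -> bool:
    """A failing green test demands action: it blocks the merge."""
    return any(o.tier is Tier.GREEN and not o.passed for o in outcomes)


def needs_a_look(outcomes: list[Outcome]) -> list[str]:
    """Failing red tests are clues to investigate, not release blockers."""
    return [o.name for o in outcomes if o.tier is Tier.RED and not o.passed]


def red_ratio(outcomes: list[Outcome]) -> float:
    """Share of the suite that is red, to watch for noise or blind spots."""
    if not outcomes:
        return 0.0
    return sum(o.tier is Tier.RED for o in outcomes) / len(outcomes)


# Made-up results from one run.
outcomes = [
    Outcome("test_login_succeeds", Tier.GREEN, passed=True),
    Outcome("test_invoice_totals", Tier.GREEN, passed=True),
    Outcome("test_new_discount_rounding", Tier.RED, passed=False),
    Outcome("test_bulk_import_limits", Tier.RED, passed=True),
]

print(blocks_merge(outcomes), needs_a_look(outcomes), red_ratio(outcomes))
```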

Step 5: Make Test Generation a Leadership-Driven Decision

Test creation can't be left to chance or individual discretion. When test generation is optional, it’s the first thing to drop under deadline pressure. A mature testing strategy treats test generation as an organizational standard. Leadership must define when and where tests are required, and ensure tools enforce that automatically.

How to do it:

- Set clear testing rules so every new feature, module, or API route includes required tests by default.

- Automate enforcement in CI/CD so untested code can’t merge quietly.

- Let tech leads tune scope by risk and change rate; keep policy flexible, not rigid.

- Use Early Catch to scale enforcement across teams. It plugs into pull requests, auto-generates missing tests, flags coverage gaps, and keeps testing rules alive in real workflows.

- Track real signals like rule compliance, skipped tests, and defect trends instead of bragging about raw coverage.
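To illustrate what automated enforcement can mean at its simplest, the sketch below checks that every changed source file has a matching test file. It is a generic example and does not represent how Early Catch works; the `src/` and `tests/` layout and the `test_<module>.py` naming rule are assumptions.

```python
from pathlib import PurePosixPath


def expected_test_path(source: str) -> str:
    """Assumed convention: src/pkg/mod.py is tested by tests/pkg/test_mod.py."""
    relative = PurePosixPath(source).relative_to("src")
    return str(PurePosixPath("tests") / relative.parent / f"test_{relative.name}")


def missing_tests(changed: list[str], existing: set[str]) -> list[str]:
    """Return changed Python sources under src/ that have no matching test file."""
    sources = [f for f in changed if f.startswith("src/") and f.endswith(".py")]
    return [f for f in sources if expected_test_path(f) not in existing]


# Hypothetical pull request: two source files and a README were touched.
changed = ["src/billing/invoice.py", "src/auth/session.py", "README.md"]
existing = {"tests/auth/test_session.py"}

untested = missing_tests(changed, existing)
if untested:
    print("Missing tests for:", ", ".join(untested))
```

In a pipeline, a non-empty result would fail the check so the gap is visible before merge, while tech leads could still exempt low-risk paths.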

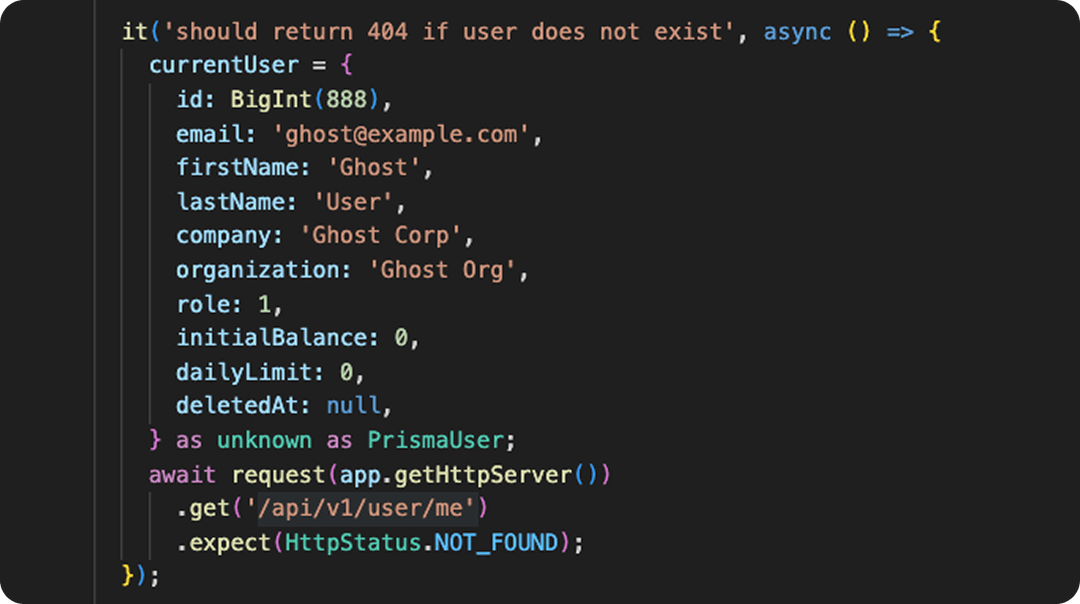

Example of a unit test that validates edge cases (404 response). Automated test generation tools like Early can instantly create or augment such tests within your CI pipeline.
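For readers without the image, a test of the kind this caption describes might look like the following sketch. The `get_user` handler and the in-memory `USERS` store are hypothetical stand-ins, not code from any real service or from Early.

```python
import unittest

USERS = {1: {"id": 1, "name": "Ada"}}  # hypothetical in-memory data store


def get_user(user_id: int) -> tuple[int, dict]:
    """Hypothetical handler: returns (HTTP status code, JSON-like body)."""
    user = USERS.get(user_id)
    if user is None:
        return 404, {"error": "user not found"}
    return 200, user


class GetUserTests(unittest.TestCase):
    def test_existing_user_returns_200(self):
        status, body = get_user(1)
        self.assertEqual(status, 200)
        self.assertEqual(body["name"], "Ada")

    def test_unknown_user_returns_404(self):
        # Edge case: the ID does not exist, so the caller should get a 404.
        status, body = get_user(999)
        self.assertEqual(status, 404)
        self.assertIn("error", body)
```

Saved as a module, this can be run with `python -m unittest`. The second test is the edge case: it pins down what callers get for a missing record, so a later refactor cannot silently change it.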

A proper automated testing strategy gives developers a clear map of what’s critical, automates the boring parts, and forces feedback early enough to prevent panic later. The payoff isn’t “more tests” but fewer surprises, fewer 2 AM rollbacks, and a team that ships with confidence instead of anxiety.

Early Catch was built for that kind of discipline. It lets engineering leaders set the testing rules once and know they’ll be followed on every pull request. There is no babysitting, no begging for coverage, just enforced consistency that makes releases predictable again.

Explore how Early Catch makes leadership-guided, automated testing possible. Book a demo today.